Kensuke Harada, Professor from Osaka University in Japan, explains how a humanoid robot searches for an object in our daily environment

In this article, we introduce a biped humanoid robot that can search our daily environment and grasp a target everyday object with its hand. Building such a robot is difficult because occlusion and noise in the vision sensor data hinder object detection.

We adopt the POMDP (Partially Observable Markov Decision Process) framework, representing the state of the target object as a probability distribution. After determining the next action, the humanoid robot walks to the next position to observe the scene from a different perspective. Once occlusions have been reduced by merging point clouds from multiple perspectives, the robot detects the target object with a model-based matching method.

Finally, the robot can automatically grasp the target object. We confirmed the effectiveness of our proposed method with an experiment using the biped humanoid robot HRP-2.

Introduction

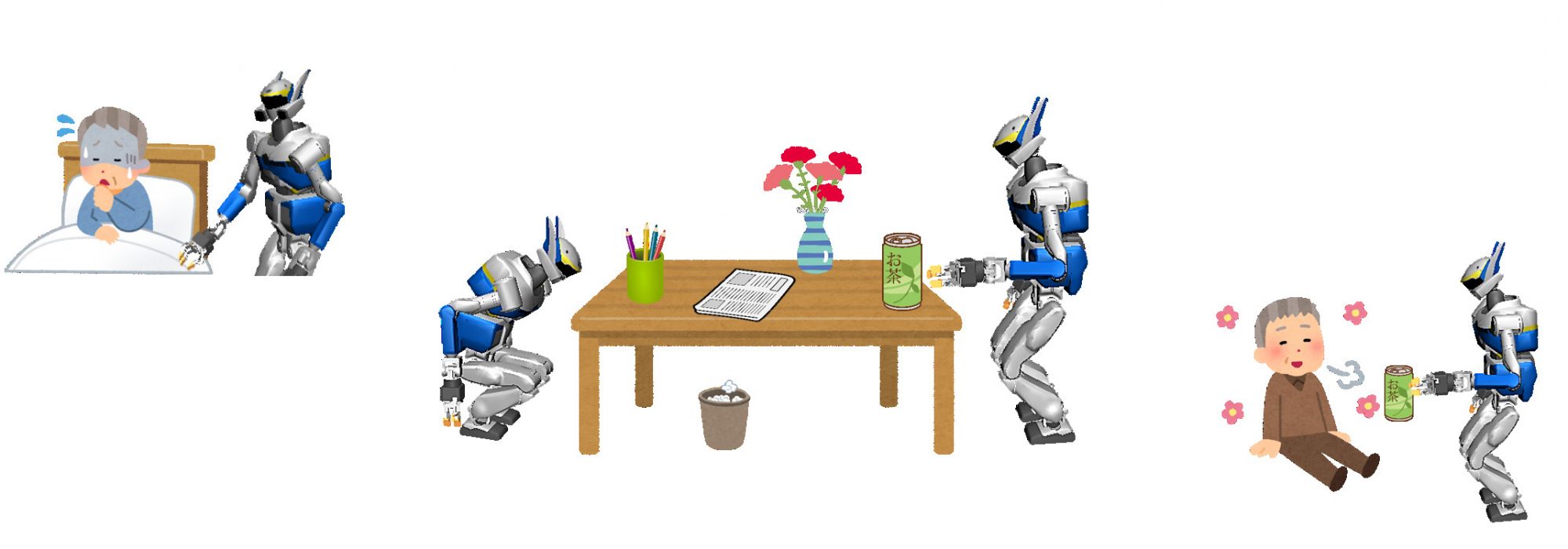

In the coming years, humanoid robots are expected to assist us in our daily lives. Consider the task of bringing an everyday object to a human (Figure 1). To accomplish it, a humanoid robot has to walk around our daily environment and search for the target object. After detecting the object, the robot picks it up and brings it to the human. The ultimate goal of our research is to realise this capability of detecting and picking up a target everyday object with a biped humanoid robot.

To detect the target object, an RGB-D camera attached to the head of the humanoid robot is used. However, in a cluttered daily environment the sensor data contains considerable occlusion and noise, so detecting the target object is not easy. To reduce the occlusion in the point cloud obtained by the RGB-D camera, the humanoid robot has to walk to another position and merge the newly obtained point cloud with the previously captured one. It is therefore important to choose a second viewpoint that minimises the occlusion, because a well-chosen viewpoint also minimises the number of times the robot has to capture a point cloud from a different viewpoint.

To cope with this problem, we propose a probabilistic approach to observation planning for a biped humanoid robot. Our method uses a POMDP (Partially Observable Markov Decision Process, Kaelbling et al. (1998)) to plan the view pose while taking uncertainty into account. A whole-body motion generation method is used to realise the planned view pose while keeping the humanoid robot balanced. After planning the view pose, the robot walks to the planned position by using a walking pattern generator and obtains the point cloud with an RGB-D camera.

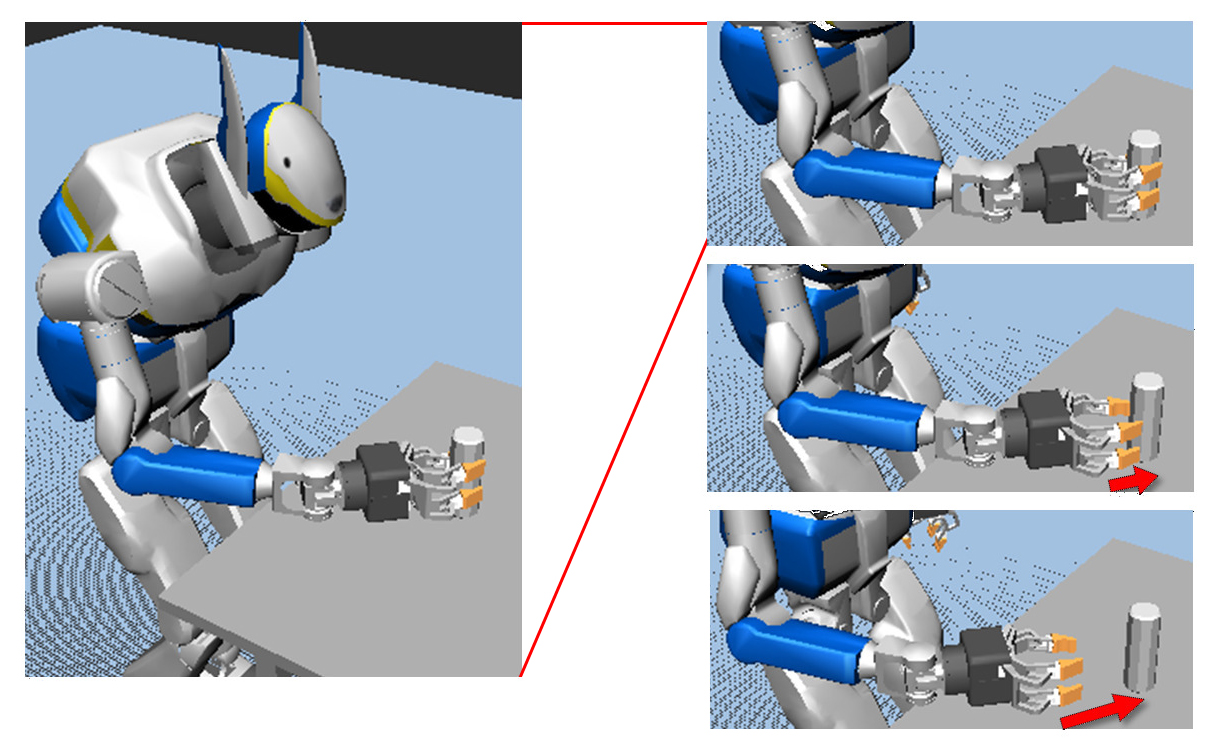

The obtained point cloud is merged with the previously captured point cloud. We then try to fit the 3D model of the target object to the merged point cloud. If the 3D model fits the point cloud well, we judge that the object has been correctly detected. The humanoid robot then tries to grasp the target object, using candidate grasping poses that are obtained in advance with a grasp planner.

Our contributions are two-fold. The primary contribution lies in the proposal of a framework for detecting and picking the target daily object by integrating such individual functions. The secondary contribution is a discretisation of the search space and robot actions to apply the POMDP framework to a real humanoid robot.

Proposed method

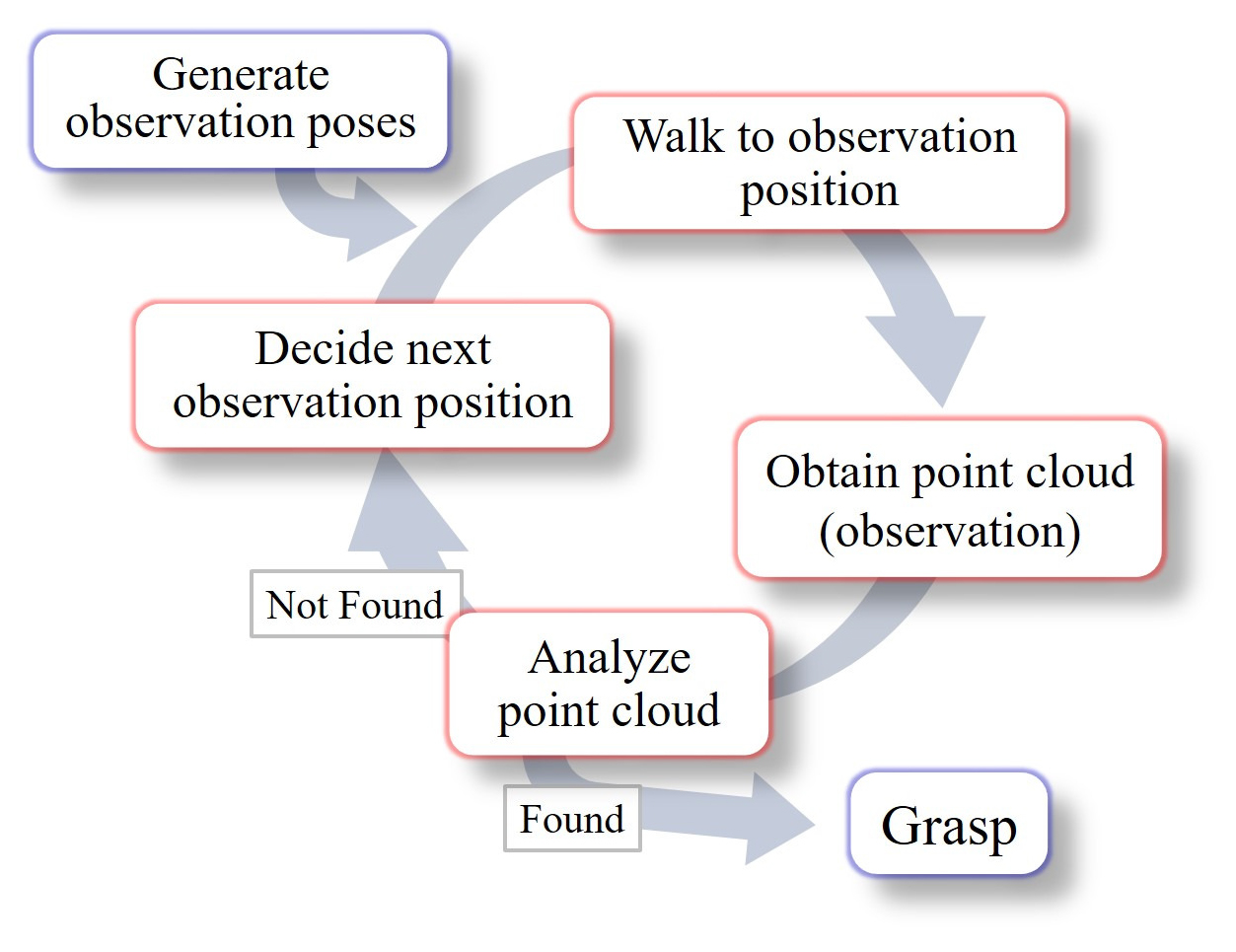

An overview of our system is shown in Figure 2. The system is composed of an offline phase and an online phase. In the offline phase, we generate a set of candidate view poses of the robot and a set of candidate poses for grasping the target object with the gripper. In the online phase, we first search for the view pose by using the POMDP. Then, by using a biped walking pattern generator (Morisawa et al. (2015)), the robot walks to the target position and takes the selected view pose. Once the robot has taken the desired view pose, the point cloud is obtained with the RGB-D camera attached to the head. The obtained point cloud is merged with the previously captured point cloud by using the ICP (Iterative Closest Point) algorithm. We then fit the 3D model of the target object to the point cloud by using CVFH (Clustered Viewpoint Feature Histogram) features. We iterate these steps until the 3D model fits the point cloud well. After detecting the pose of the target object, the robot grasps the object by selecting one of the candidate grasping poses.
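The online phase described above can be read as a simple loop. The following Python sketch is only an illustration of that control flow, not the authors' implementation: the function `search_and_detect`, its parameters and the `max_views` limit are hypothetical names introduced here, and the planner, camera, ICP merge and model fitting are passed in as placeholder callables.

```python
from typing import Callable, List, Tuple

Point = Tuple[float, float, float]


def search_and_detect(
    plan_view: Callable[[List[Point]], str],
    capture: Callable[[str], List[Point]],
    merge: Callable[[List[Point], List[Point]], List[Point]],
    fit_model: Callable[[List[Point]], float],
    fit_threshold: float,
    max_views: int = 5,  # assumption: the article does not state a limit
) -> Tuple[bool, List[str]]:
    """Observe from planned view poses until the object model fits well.

    All callables are hypothetical stand-ins:
      plan_view  chooses the next view pose (the POMDP planner in the article)
      capture    walks to the view pose and returns a point cloud
      merge      registers the new cloud against the old one (ICP in the article)
      fit_model  returns a fitting score, where higher means a better fit
    """
    merged: List[Point] = []
    visited: List[str] = []
    for _ in range(max_views):
        view = plan_view(merged)
        visited.append(view)
        cloud = capture(view)
        # The first cloud has nothing to be merged with.
        merged = merge(merged, cloud) if merged else cloud
        if fit_model(merged) >= fit_threshold:
            return True, visited  # object detected, grasping can start
    return False, visited
```

Passing the individual steps in as callables keeps the loop independent of any particular planner or point cloud library, so each step can be replaced by a stub when the control flow is tested on its own.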

Experiment

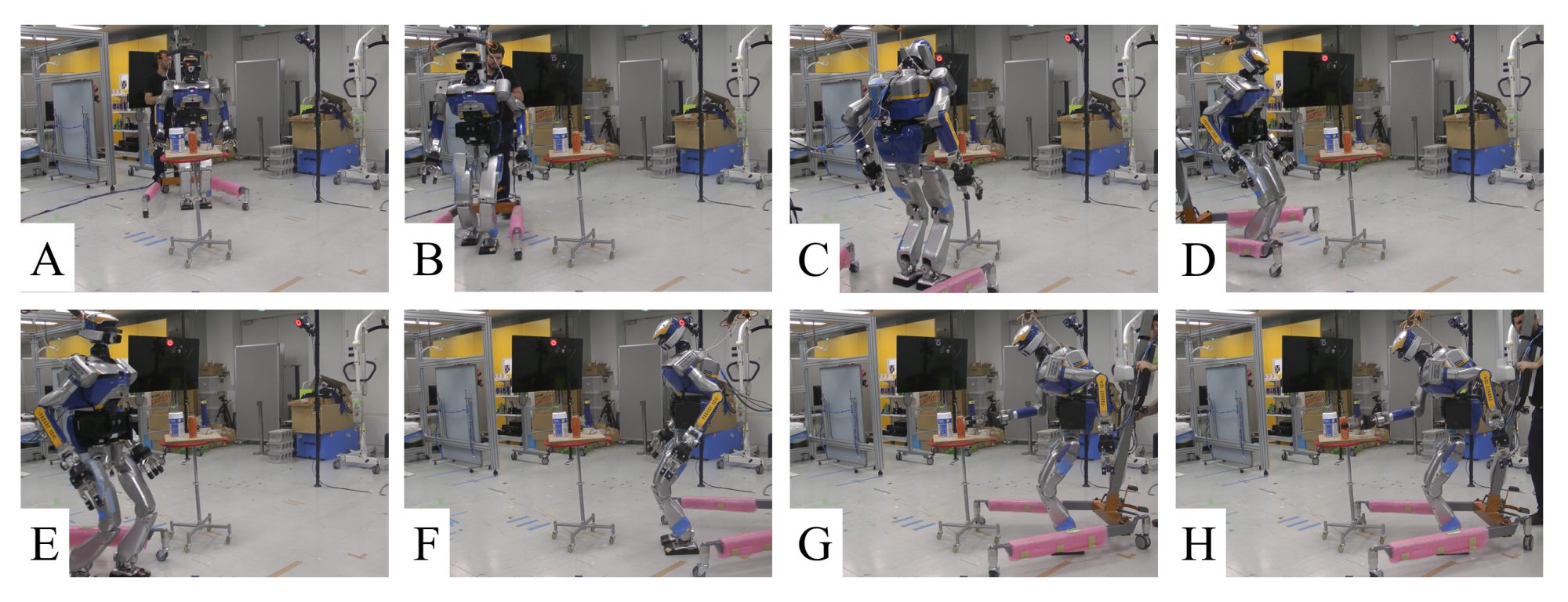

The experimental result is shown in Figure 4. From the initial state (A), the robot walks (B) to the randomly selected first view pose (C) and captures the point cloud.

By using the POMDP, we update the probability distribution of the object pose. The POMDP then evaluates all the candidate observation poses and decides on the view pose that minimises the occlusion.
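To make the belief update and the view selection more concrete, here is a minimal Python sketch under strong simplifying assumptions. It assumes that the search space is discretised into named cells, that each candidate view pose sees a known set of cells, and that the detector finds a visible object with a fixed probability `p_detect`. It then picks the next view greedily, as the one that covers the most probability mass. This one-step rule is a stand-in for illustration and not the authors' POMDP solver; all names and values are hypothetical.

```python
from typing import Dict, Set


def update_belief_after_miss(
    belief: Dict[str, float],
    visible_cells: Set[str],
    p_detect: float = 0.8,  # assumed detection probability for a visible object
) -> Dict[str, float]:
    """Bayes update of a discrete belief when the object was NOT detected."""
    posterior = {}
    for cell, prior in belief.items():
        # A miss is unlikely if the object sits in a visible cell and
        # carries no information about cells that were occluded.
        likelihood = (1.0 - p_detect) if cell in visible_cells else 1.0
        posterior[cell] = prior * likelihood
    total = sum(posterior.values())
    if total <= 0.0:
        raise ValueError("belief collapsed to zero; check p_detect and the prior")
    return {cell: p / total for cell, p in posterior.items()}


def select_next_view(
    belief: Dict[str, float],
    visibility: Dict[str, Set[str]],
) -> str:
    """Greedy choice: the view whose visible cells hold the most belief mass."""
    def visible_mass(view: str) -> float:
        return sum(belief.get(cell, 0.0) for cell in visibility[view])

    return max(visibility, key=visible_mass)
```

For example, with a uniform belief over four cells `a` to `d` and a miss from a view that sees `a` and `b`, the update moves probability mass towards `c` and `d`, so a candidate view that covers both of those cells is preferred next.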

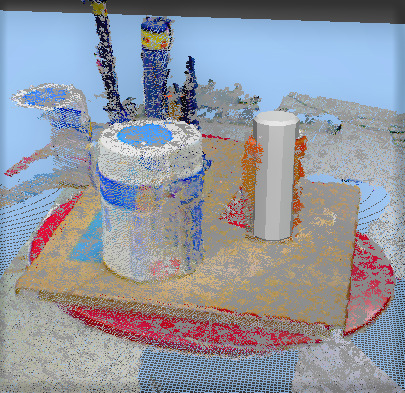

Then, the robot moves to the second view pose (D) and captures the point cloud again. The captured point cloud is merged with the previously captured point cloud. The POMDP again evaluates all the candidate observation poses and decides on the view pose that minimises the occlusion. Because the ICP score is still below the threshold, the robot walks on (E) to the third view pose (F). The merged point cloud captured in this view pose is shown in Figure 5. This time, the ICP score is above the threshold and the robot successfully detects the target can. Among 90 candidate grasping poses for the can, the robot selects one for which the robot's pose respects the joint angle limits, has an inverse kinematics (IK) solution, is collision-free and keeps the robot balanced. Finally, the robot tries to grasp the object (G and H).
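The grasp selection step amounts to filtering the precomputed candidates through a set of feasibility checks. The Python sketch below illustrates this idea only. The article does not say how one grasp is chosen when several are feasible, so returning the first feasible candidate is an assumption made here, and the check functions are hypothetical placeholders for the joint limit, IK, collision and balance tests.

```python
from typing import Callable, Iterable, Optional, Sequence, TypeVar

G = TypeVar("G")  # any representation of a candidate grasping pose


def select_grasp(
    candidates: Iterable[G],
    checks: Sequence[Callable[[G], bool]],
) -> Optional[G]:
    """Return the first candidate that passes every feasibility check.

    `checks` would hold predicates such as joint limits, IK solvability,
    collision freedom and balance; here they are arbitrary callables.
    Returns None when no candidate is feasible.
    """
    for grasp in candidates:
        # all() stops at the first failed check, so cheap tests should
        # be listed before expensive ones such as collision checking.
        if all(check(grasp) for check in checks):
            return grasp
    return None
```

If the candidates are sorted by a quality measure from the grasp planner beforehand, the same function returns the best feasible grasp instead of an arbitrary one.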

Conclusions

This article presented a humanoid robot that can search our daily environment and grasp an everyday object with its hand. We introduced the POMDP framework as a high-level planner that decides the next viewpoint of the robot.

Thanks to this system, our robot was able to identify the target object in a cluttered scene by changing its viewpoint three times. It also picked up the object after identifying it, which indicates the accuracy and robustness of the proposed method.

References

Kaelbling, L. P., Littman, M. L., and Cassandra, A. R. (1998). Planning and acting in partially observable stochastic domains. Artificial Intelligence 101, 99–134. doi: https://doi.org/10.1016/S0004-3702(98)00023-X

Morisawa, M., Kita, N., Nakaoka, S., Kaneko, K., Kajita, S., and Kanehiro, F. (2015). Biped locomotion control for uneven terrain with narrow support region, 34–39. doi: https://doi.org/10.1109/SII.2014.7028007

Authors

Masato Tsuru (1), Pierre Gergondet (3), Tomohiro Motoda (1), Adrien Escande (2), Ixchel G. Ramirez-Alpizar (2), Weiwei Wan (1), Eiichi Yoshida (2), and Kensuke Harada (1, 2)

(1) Osaka University, Japan.

(2) National Institute of Advanced Industrial Science and Technology, Japan.

(3) Beijing Institute of Technology, China.

*Please note: this is a commercial profile