Jon Ander Gómez and Monica Caballero, DeepHealth Technical Manager and Project Coordinator, lift the lid on an exciting project that combines deep learning and high-performance computing to boost biomedical applications for health

Healthcare is one of the key sectors of the global economy, so any improvement in healthcare systems has a high impact on the welfare of society. European public health systems are generating large datasets of biomedical data, in particular images, that constitute a large, unexploited knowledge database, since most of their value comes from the interpretations of experts. Nowadays, this interpretation is still performed manually in most cases. The recent trend of bringing together high-performance computing (HPC) and big data technologies, combined with deep learning (DL) and artificial intelligence (AI) techniques, can overcome this issue and foster innovative solutions, opening a clear path to more efficient healthcare that benefits both people and public budgets.

In this scenario, the DeepHealth project, funded by the European Union’s Horizon 2020 programme, tackles real needs of the health sector with the aim of facilitating the daily work of medical personnel and related IT experts in terms of image processing and the use and training of predictive models.

The main goal of the DeepHealth project is to put the computing power of HPC at the service of biomedical applications and, through an interdisciplinary approach, to apply deep learning and computer vision techniques to large and complex biomedical datasets in order to support new and more efficient ways of diagnosing, monitoring and treating diseases. In line with this goal, one of the most important challenges of the DeepHealth project is to design and implement distributed versions of the training algorithms that efficiently exploit hybrid HPC + big data computing architectures by using the data-parallel programming paradigm.
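To make the data-parallel idea concrete, the following is a minimal sketch in plain Python and is not code from the DeepHealth libraries. It assumes a toy linear model trained with synchronous gradient descent; the function names (`make_shards`, `local_gradient`, `data_parallel_step`, `train`) and the synthetic dataset are hypothetical and chosen only for illustration. Each simulated worker computes a gradient on its own share of the data, and the partial gradients are then combined into a single update.

```python
"""Toy illustration of synchronous data-parallel training (not DeepHealth code)."""
from typing import List, Tuple

Sample = Tuple[float, float]  # (input x, target y)


def make_shards(data: List[Sample], n_workers: int) -> List[List[Sample]]:
    """Split the dataset so that each simulated worker holds a disjoint part."""
    return [data[i::n_workers] for i in range(n_workers)]


def local_gradient(w: float, b: float, shard: List[Sample]) -> Tuple[float, float, int]:
    """Summed squared-error gradient of the model y = w*x + b on one shard."""
    grad_w = sum(2.0 * (w * x + b - y) * x for x, y in shard)
    grad_b = sum(2.0 * (w * x + b - y) for x, y in shard)
    return grad_w, grad_b, len(shard)


def data_parallel_step(w: float, b: float, shards: List[List[Sample]],
                       lr: float) -> Tuple[float, float]:
    """One synchronous update: gather per-shard gradients, average, apply."""
    # In a real cluster each call below would run on a different node.
    partials = [local_gradient(w, b, s) for s in shards]
    n_total = sum(n for _, _, n in partials)
    grad_w = sum(gw for gw, _, _ in partials) / n_total
    grad_b = sum(gb for _, gb, _ in partials) / n_total
    return w - lr * grad_w, b - lr * grad_b


def train(data: List[Sample], n_workers: int = 4, lr: float = 0.1,
          steps: int = 2000) -> Tuple[float, float]:
    """Run repeated data-parallel steps starting from w = b = 0."""
    shards = make_shards(data, n_workers)
    w, b = 0.0, 0.0
    for _ in range(steps):
        w, b = data_parallel_step(w, b, shards, lr)
    return w, b


# Synthetic data drawn from y = 3x + 1 (purely illustrative values).
DATA = [(i / 10, 3.0 * (i / 10) + 1.0) for i in range(20)]

if __name__ == "__main__":
    print(train(DATA))
```

Because the summed shard gradients are divided by the total number of samples, the update matches the one a single machine would compute on the full dataset, up to floating-point rounding. In this synchronous sketch, adding workers therefore changes how the work is divided rather than the model that is learned.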

To fulfil this goal, the DeepHealth Consortium, coordinated by everis and technically led by the Technical University of Valencia (UPV), brings together 21 partners from nine European countries, forming a multidisciplinary group of research and health organisations as well as large and SME industrial partners.

The DeepHealth toolkit: A key open-source asset for eHealth AI-based solutions

At the centre of the innovations proposed by DeepHealth is the DeepHealth toolkit, free and open-source software that will provide a unified framework for exploiting heterogeneous HPC and big data architectures, combined with deep learning and computer vision capabilities, to optimise the training of predictive models. The toolkit is composed of two core libraries, the European Distributed Deep Learning Library (EDDLL) and the European Computer Vision Library (ECVL), and a dedicated front-end. The front-end will provide a web-based graphical user interface that makes the functionalities of the core libraries easier to use for technical people, mainly computer and data scientists, who do not have a profound knowledge of deep learning and computer vision.

The development of EDDLL and ECVL is led by the Pattern Recognition and Human Language Technology (PRHLT) research centre of the UPV and the Dipartimento di Ingegneria “Enzo Ferrari” of the Università degli Studi di Modena e Reggio Emilia, respectively. The Department of Computer Science of the University of Torino and the Barcelona Supercomputing Center are also contributing strongly to the adaptation of the libraries to HPC and cloud environments.

The toolkit will help to reduce the gap between the availability of cutting-edge technologies and their extensive use in medical imaging, through its integration into current and new biomedical platforms and applications.

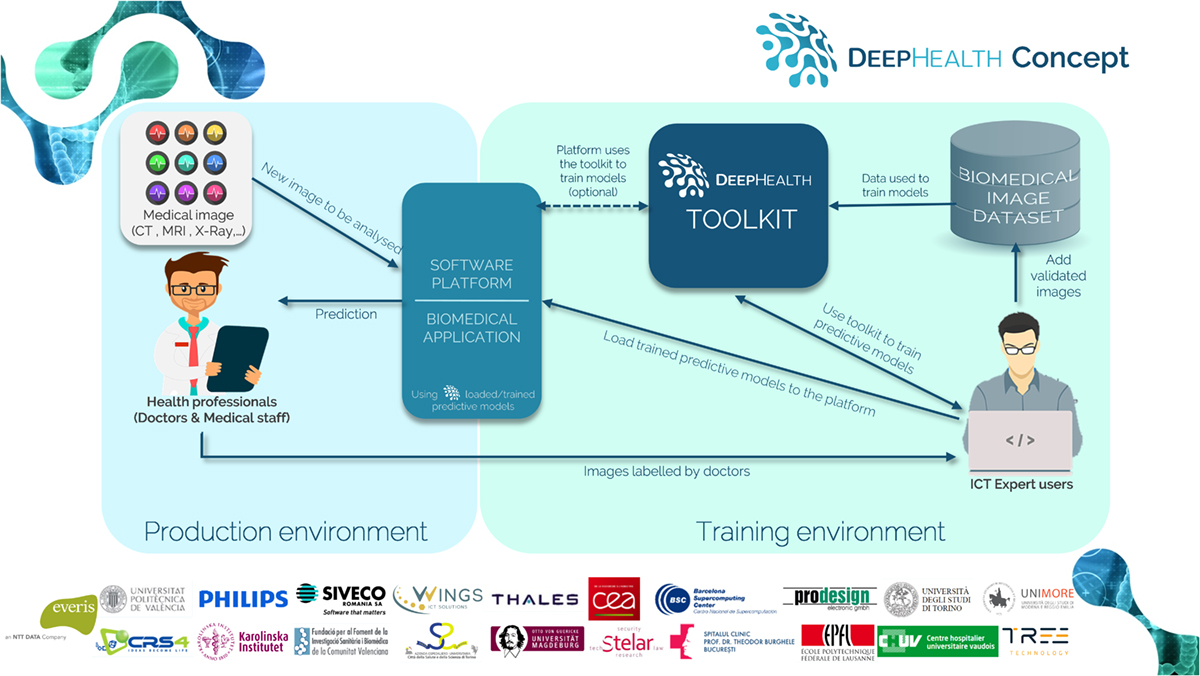

The DeepHealth concept – application scenarios

The DeepHealth concept (depicted in the figure) focuses on scenarios where image processing is needed for diagnosis. The training environment is where expert IT users work with datasets of images to train predictive models. The production environment is where medical personnel ingest an image coming from a scan into a software platform or biomedical application that uses predictive models to obtain clues that can help them make decisions during diagnosis. Doctors have the knowledge to label images, define objectives and provide related metadata. IT staff are in charge of processing the labelled images, organising the datasets, performing image transformations when required, training the predictive models and loading them into the software platform. The DeepHealth toolkit will allow IT staff to train models and run the training algorithms on hybrid HPC + big data architectures without a profound knowledge of deep learning, HPC or big data, increasing their productivity by reducing the time required. This process is transparent to doctors; they just provide images to the system and get predictions such as indicators, biomarkers or the segmentation of an image for identifying tissues, bones, nerves, blood vessels, etc.

14 pilots and seven platforms to validate the DeepHealth proposed innovations

The DeepHealth innovations will be validated in 14 pilot test-beds using seven different biomedical and AI software platforms provided by partners. The use cases cover three main areas: (i) neurological diseases, (ii) tumour detection and early cancer prediction and (iii) digital pathology and automated image annotation. The pilots will make it possible to evaluate the performance of the proposed solutions in terms of the time needed to pre-process images, the time needed to train models and the time needed to put models into production. In some cases, these times are expected to fall from days or weeks to just hours.

Advances made so far and upcoming news and results

DeepHealth started on January 1st, 2019. During the first few months, relevant advances have been made, mainly in fine-tuning the 14 use cases and the specifications and requirements of the platforms supporting them, and in defining the libraries’ APIs and the infrastructure requirements. Also important is the definition of the ethical and data privacy requirements for dealing with medical data. Please follow us closely, since the first public release of the EDDLL and ECVL is expected in the upcoming months.

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 825111, DeepHealth Project.

Key facts:

- ICT-11-2018-2019 – HPC and Big Data enabled Large-scale Test-beds and Applications

- Budget: ~€14.64 million – EC funding = ~€12.77 million

- Duration: 36 months (Jan 2019 – Dec 2021)

- DeepHealth project

Please note: This is a commercial profile