Chris Rourk, Partner at Jackson Walker, a member of Globalaw, talks us through the realities of attempting to create laws on artificial intelligence.

Regulation is an industry, but effective regulation is an art. There are a number of recognised principles that should be considered when regulating an activity: efficiency, stability, regulatory structure and general principles of regulation, as well as the resolution of conflicts between these competing principles. With artificial intelligence (AI) technology, several factors make it difficult to apply these principles centrally, but AI should nonetheless be considered as part of any relevant regulatory regime.

We need to define what is meant by AI

Because AI technology is still developing, it is difficult to discuss the regulation of AI without reference to a specific technology, field or application where these principles can be more readily applied. For example, optical character recognition (OCR) was considered to be AI technology when it was first developed, but today, few would call it AI.

More commonly accepted examples of AI technology today include:

• Predictive technology for marketing and for navigation;

• Technology for ridesharing applications;

• Commercial flight routing;

• Email spam filters.

These technologies are as different from each other as they are from OCR technology. This demonstrates why regulating AI technology centrally, whether through a single regulatory authority or around a single regulatory principle, is unlikely to work in practice.

Efficiency-related principles include promoting competition between participants by avoiding restrictive practices that impede the provision of new AI-related technologies. This in turn lowers barriers to entry for such technologies, provides freedom of choice between AI technologies and creates competitive neutrality between existing and new AI technologies (i.e. a level playing field). OCR technology was initially unregulated, at least by a central authority, and was therefore able to develop and become faster and more efficient, even though OCR output often contained a large number of errors.

Similarly, a centralised regulatory regime that covers all of the AI uses listed above, whether administered by a central authority or built around a single focus (e.g. avoiding privacy violations), would be inefficient.

The reason for this inefficiency is clear: the functions and markets of these technologies are unrelated.

The potential failures of strict, centralised AI regulation

Strict regulations that require all AI applications to evaluate and protect the privacy of users might not only fail to achieve any meaningful privacy goals, but could also render those AI applications commercially unacceptable for reasons completely unrelated to privacy. For example, a ridesharing regulation that assigns drivers based on privacy concerns could result in substantially longer wait times for riders if it prevents the closest drivers from being assigned because they have previously picked up the same passenger at that location. However, industry-specific regulation to address privacy issues might make sense, depending on the specific technology and the specific concern within that industry.

Stability-related principles include incentives for the prudent assessment and management of risk (such as minimum standards), regulatory requirements based on market values, and prompt action to accommodate new AI technologies.

Taking OCR as an example, if minimum standards for an acceptable number of errors per document had been implemented, they would have been difficult to police, because source documents vary in quality and some would inevitably produce fewer errors than others. In the case of OCR, the market was able to provide sufficient regulation, as companies competed with each other for the best solution, but other AI technologies may need industry-specific regulations to ensure minimum standards or other stability-related principles.

Principles of regulatory structure include following a functional/institutional approach to regulation, coordinating regulation across different agencies, and using a small number of regulatory agencies for any regulated activity. Against these principles, no single regulatory authority could implement and administer AI regulations across all markets, activities and technologies, and creating one would simply add another regulatory regime to those already in place.

An example of existing tech regulations that focus on one thing

For example, in the US many state and federal agencies have OCR requirements that centre on specific technologies or software for document submission. Software providers can either make their applications compatible with those requirements or seek to be included on a list of approved applications. They do the latter by working with the state or federal agency to ensure that documents submitted using their applications will be compatible with the agency's uses. Other AI technologies may likewise be subject to industry-specific regulations that make sense within the existing regulatory structure for that industry.

General principles of regulation include identifying the specific objectives of a regulation, cost-effectiveness, equitable distribution of regulatory costs, flexibility of regulation and a stable relationship between regulators and regulated parties. Some of these principles could have been implemented for OCR, such as a specific objective expressed as a maximum number of errors per page. The other factors, however, would have been more difficult to determine and would again depend on an industry- or market-specific analysis. For many applications in particular industries, these factors were addressed without an omnibus regulatory structure being implemented.

What should makers of AI regulation think about?

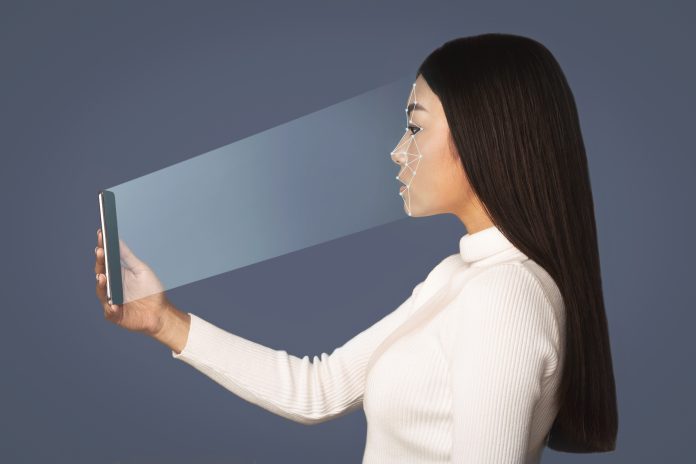

Preventing conflict between these objectives requires a regime in which each of them can be achieved. For AI, that requires an industry- or market-specific approach, and in the US this approach has generally been followed for AI-related technologies. As discussed, OCR-related technology is regulated by specific federal, state and local agencies as it pertains to their particular missions. Facial recognition is another example: a regime of federal, state and local regulation is still taking shape. Many of these authorities have used facial recognition technology for different applications, and privacy advocates have recently pushed back against its use.

It is only when conflicts develop between such different regimes that input from a centralised authority may be required.

In the United States, an industry- and market-based approach is generally being adopted. In the 115th Congress, thirty-nine bills containing the phrase "artificial intelligence" were introduced, and four were enacted into law. A large number of such bills were also introduced in the 116th Congress. As of April 2017, twenty-eight states had introduced some form of regulation for autonomous vehicles, and a large number of states and cities have proposed or implemented regulations for facial recognition technology.

While critics will no doubt assert that little is being done to regulate AI, a simplistic, heavy-handed approach to AI regulation that reacts to a single concern such as privacy is unlikely to satisfy these principles of regulation and should be avoided. Artificial intelligence requires regulation with real intelligence.

By Chris Rourk, Partner at Jackson Walker, a member of Globalaw.