Dr Lorraine Dodd of Bioss International discusses understanding the limitations of AI agents in terms of their potential for choice-making, and explains how the study of choose-ables could enhance them

Artificial Intelligence (AI) agents are becoming part of our everyday lives, from algorithms that make assessments about us (e.g. our eligibility for insurance) through to decision-agents that give us solutions to specific problems (e.g. remote diagnosis). In each case, the AI agent works with a prescribed range of options, using a tailored set of inputs processed through an internal ‘logic’ that assigns a value to each of the prescribed outputs so that a ‘choice’ can be presented.
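As a minimal sketch of that pattern, the Python below scores a fixed set of options from one input and presents the highest-valued one. The option names, the `risk` input and the scoring rules are all hypothetical, chosen only to illustrate the idea of a prescribed option set; they are not taken from any real insurance or diagnostic system.

```python
from typing import Dict, Mapping

# Hypothetical prescribed option set: the agent can never choose outside it.
PRESCRIBED_OPTIONS = ("approve", "refer", "decline")


def score_options(inputs: Mapping[str, float]) -> Dict[str, float]:
    """Illustrative internal 'logic': assign a value to each prescribed option."""
    risk = inputs.get("risk", 0.0)  # assumed input, scaled 0..1
    return {
        "approve": 1.0 - risk,                # attractive when risk is low
        "refer": 1.0 - abs(risk - 0.5) * 2,   # attractive when risk is borderline
        "decline": risk,                      # attractive when risk is high
    }


def present_choice(inputs: Mapping[str, float]) -> str:
    """Select the highest-valued option from the fixed set."""
    scores = score_options(inputs)
    return max(PRESCRIBED_OPTIONS, key=lambda option: scores[option])
```

However the scoring is tuned, the agent here only selects among options that someone else assembled; it has no part in deciding what the options are.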

The scope of the options tends to be pre-set and finite, and the nature of the options is based on some kind of response. The question then is: can AI agents work with option sets that are open-ended and whose nature is something more than responsive and objective? Furthermore, could AI agents support more subjective judgements that concern concepts of belief and value, or of self and other?

These general questions are particularly relevant as the world moves towards a mix of AI agents and people acting in collaboration with each other. That shift raises further questions about the limits of (and abdication of) agency, authority, and accountability, and some kind of formal, systemic, structural logic is needed to address them. (1)

For example, when might it be acceptable (and according to whom, what, why?) to grant full agency and authority to AI agents over their human counterparts? In what contexts? What assumed capacity for imaginative potential might such AI agents be expected or required to have? What are the natures and extents of their sensing and sense-making frames of reference? How might these be shaped by the AI agent’s nature and scope of choice options?

It is important that we pose such questions in order to understand the limitations of AI agents in terms of their potential for choice-making. Choice-making is more than decision-making or choice selection; it is what determines the scope and nature of choice options in the first place. Before an AI agent can choose, a list of things to choose from must be assembled, and that list forms the agent’s potential. In the case of people, these choice options are subject to our context and our desires, beliefs and motivations.

The concept of ‘choose-ables’

The economist George L S Shackle defined the concept of ‘choose-ables’ as the “imagined deemed possible.” (2) For a given person, they are the possible ways forward that the person can imagine, deems acceptable (e.g. within their moral code), and considers open to them. They also reflect what the person feels obliged to consider or believes they are barred from considering (e.g. social taboos), and what they are (or are not) competent to carry out. Choose-ables are influenced by the person’s circumstances and social conditioning. Consequently, choose-ables are subjective and personal. How might the study of choose-ables enhance AI agents?

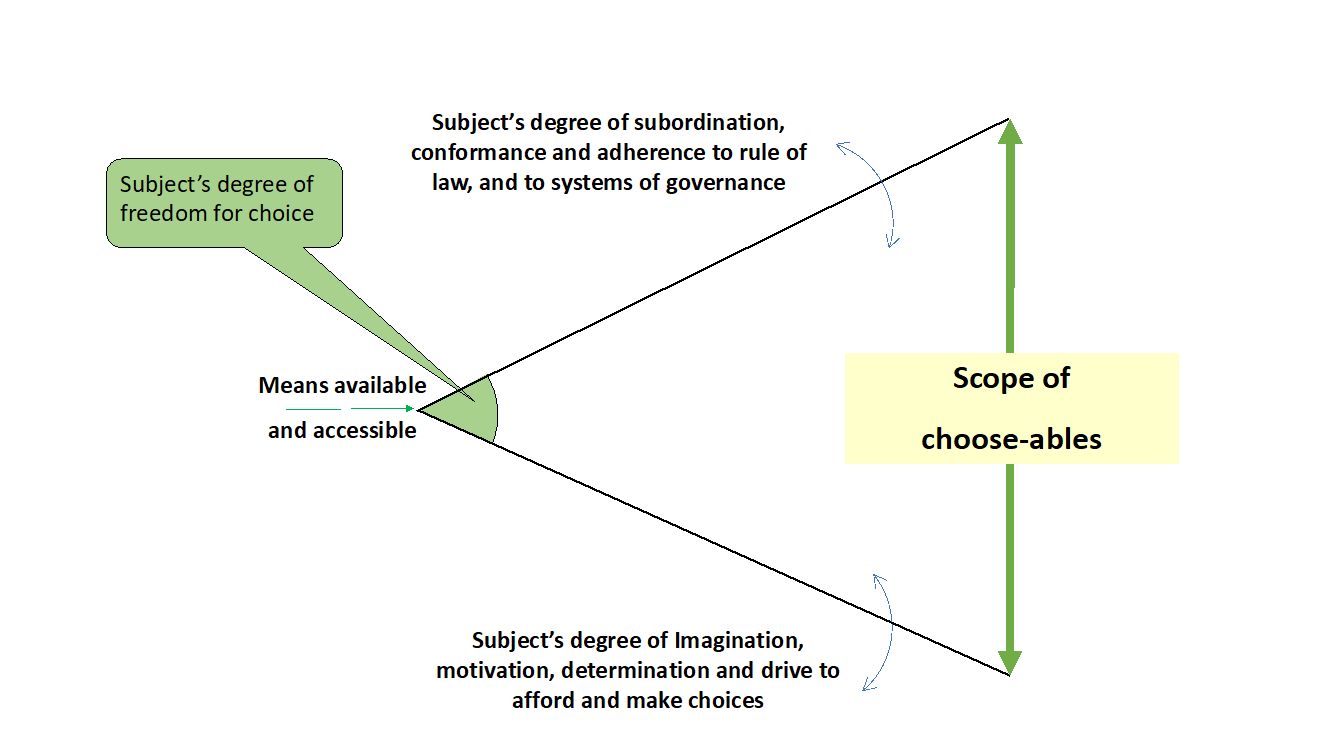

In AI agent development, it is the developer who determines the scope of the agent’s choices, most probably without any formal reference to an organising context. Figure 1 illustrates the relationship between an agent’s context and its scope of choose-ables, which is an emergent property of three ‘affordance’ factors:

- An agent’s degree of conformance (i.e. adherence to laws, rules, customs, etc.).

- An agent’s degree of will and motivation (i.e. drive to extend or limit choices given subjective needs and context).

- Means available and accessible (i.e. feasibility check on choose-ables based on capability). (3)

All of these combine to shape the agent’s potential in terms of choose-able ways forward. The concept of affordance and scope of choose-ables extends the development of AI agents beyond being responsive and active, for example into being reflective.
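One way to picture how the three factors might combine is as successive filters on whatever ways forward the agent can imagine. The sketch below is an illustration under stated assumptions, not the model from the cited paper: the `Option` and `Context` fields, the 0 to 1 scales and the thresholds are all hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Option:
    name: str
    breaks_rules: bool              # conflicts with laws, rules or customs
    required_means: FrozenSet[str]  # capabilities needed to carry it out
    appeal: float                   # subjective value to the agent, 0..1


@dataclass(frozen=True)
class Context:
    conformance: float              # 0 = ignores rules, 1 = fully conformant
    will: float                     # 0 = no drive, 1 = strong drive to extend choices
    means: FrozenSet[str]           # capabilities available and accessible


def choose_ables(imagined: List[Option], ctx: Context) -> List[Option]:
    """Keep only the imagined options that all three affordance factors allow."""
    result = []
    for opt in imagined:
        # Conformance: rule-breaking options survive only if conformance is low
        # (the 0.5 cut-off is an arbitrary assumption).
        conforms = (not opt.breaks_rules) or ctx.conformance < 0.5
        # Will and motivation: stronger drive admits less appealing options.
        motivated = opt.appeal >= 1.0 - ctx.will
        # Means: feasibility check against available capabilities.
        feasible = opt.required_means <= ctx.means
        if conforms and motivated and feasible:
            result.append(opt)
    return result
```

The same list of imagined options yields different choose-ables for different contexts, which is the sense in which they are subjective: the set belongs to the agent in its situation, not to the problem.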

The nature of an agent’s choose-ables will tend to be restricted to a lower level of cognitive capability due to the binary nature of digital computing. The subjective, relative study based around choose-ables offers a way of positioning an AI agent’s autonomy appropriately, given the agent’s limited capacity for conceiving of its context and circumstances.

This becomes increasingly relevant with the development of advisory AI agents and also for clarifying the cognitive capability and positioning of autonomous AI agents. Boden presents a typology for different degrees and gradations of autonomy and identifies three dimensions according to which an agent’s autonomy can be judged:

- The extent to which an agent’s responses to external stimuli are purely reactive or are mediated by inner mechanisms that depend partly on the agent’s history of sensory input and/or internal state.

- The extent to which an agent’s inner control mechanisms have been self-generated or self-organised rather than externally imposed.

- The extent to which a system’s inner directing mechanisms can be reflected upon and/or selectively modified by the individual concerned. (4)

The third dimension above adopts a different kind of subjective language and is about an agent’s capacity for reflection and modification based on their ‘understanding’ of contextual dynamics. This third dimension relates degrees of agent autonomy to perception and action in embodied agents:

“It remains as a significant research challenge to uncover the specific mechanisms by which circular causality and allostasis arise in natural agents and how — and to what degree — they might be replicated in artificial systems.” (5)

The reference to circular causality hints at a need for a more holistic study of choose-ables, which is relational and conditional in nature, and less about ‘if-then/else’ causality. Without such a study, AI agents will be entering into our organisations and daily lives lacking the vital formal inquiry into their cognitive capacity and their potentially dangerous ineptitude for making choices.

References

1. For more detail see http://www.bioss.com/ai/

2. Ebeling (1983) An Interview with G L S Shackle. Austrian Economics Newsletter Vol 4 (1) https://mises-media.s3.amazonaws.com/aen4_1_1_1.pdf

3. Dodd L (2018b) Choice-making and choose-ables: making decision agents more human and choosy. European Journal on Decision Processes. Vol 7, Issue 1–2, pp. 101–115 https://doi.org/10.1007/s40070-018-0092-5

4. Boden M A (1996) Autonomy and Artificiality. In: Boden M A (ed) The Philosophy of Artificial Life. Oxford University Press, New York, pp. 95–108.

5. Vernon D, Lowe R, Thill S and Ziemke T (2015) Embodied cognition and circular causality: on the role of constitutive autonomy in the reciprocal coupling of perception and action. Front. Psychol. 6:1660. doi: 10.3389/fpsyg.2015.01660

*Please note: This is a commercial profile