Zeyuan Chen and Christopher G. Healey from North Carolina State University reveal their institution’s expertise on virtual and augmented reality (VR and AR) systems

Virtual and augmented reality (VR and AR) systems let users explore and interact with the three-dimensional (3D) world in a more immersive way than traditional display devices such as monitors. An essential part of any VR/AR system is its controllers, which are used to manipulate 3D information.

For instance, users may want to inspect computer-aided design (CAD) models from different angles or control video game characters naturally and intuitively. 3D interaction tasks such as rotation and docking are challenging with conventional 2D input devices. Six Degree of Freedom (6DoF) input devices tend to be more effective, but are also difficult to design.

A variety of 6DoF devices are available, and they fall roughly into two groups: camera-based and non-camera-based systems. There has been extensive work on developing 6DoF devices using visible light or infrared camera data, such as the video mouse, SideSight, and HoverFlow. Camera-based controllers are mostly free-moving devices: users manipulate 3D information by rotating and translating the controller in free space.

A free-moving device is generally easy and natural to learn because the absolute pose of the device maps directly to the pose of the virtual object. However, this kind of device is generally complex to design. For instance, each HTC Vive controller contains 24 sensors and must be tracked by two external base stations for accurate pose estimation.

An alternative is to build an input system with a single camera, which is much cheaper than other sensor-based or multi-camera systems. However, the design is more challenging. Many systems require only regular RGB cameras. For instance, MagicMouse and ARToolKit estimate pose by tracking monochrome 2D markers, which work well if the rotation range is within 180 degrees. Some other approaches make use of colour information to achieve better performance and can track rigid (e.g. cubes) and non-rigid bodies (e.g. hands) with high accuracy and low latency.

Another branch of research uses RGB plus depth (RGBD) images, captured by devices like the Microsoft Kinect, as input for tracking. These approaches are often unable to achieve high accuracy because of the relatively low precision of the depth cameras.

Of the wide range of non-camera based controllers, desktop isometric devices are the most common. For instance, 3Dconnexion’s SpaceNavigator is a desktop 3D mouse that allows users to manipulate 3D objects by controlling its pressure-sensitive handle.

Unfortunately, users often need significant practice and training, up to several hours, before they are comfortable with this type of device.

Another popular branch of non-camera-based controllers consists of cube-based systems, which use embedded sensors and provide tangible user interfaces. The advantage of this type of device is that a cube can be aligned stably with flat surfaces, and the 3D cardinal directions are easier to recognise in the cube's coordinate system.

VR and AR systems are providing new ways to view and manipulate 3D objects. One of the essential problems is to design efficient, accurate, yet affordable controllers. Some basic interaction tasks like 3D object rotation are challenging with conventional 2D input devices. 6DoF devices are much more effective for 3D information manipulation. However, popular 6DoF devices are often hard to use or expensive, or suffer from high latency or low accuracy.

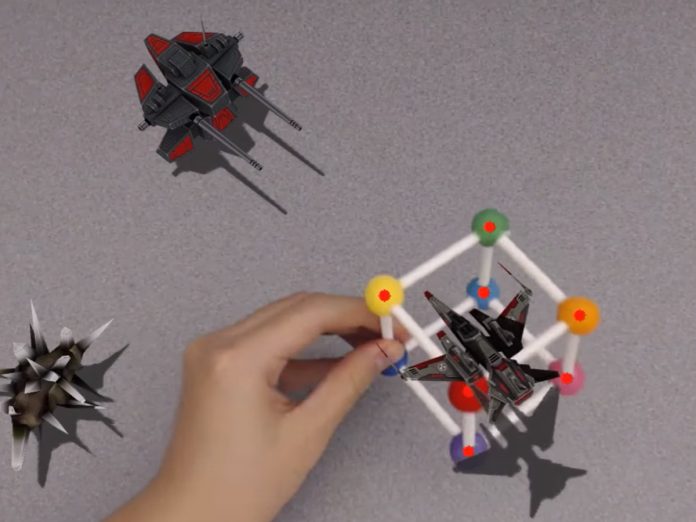

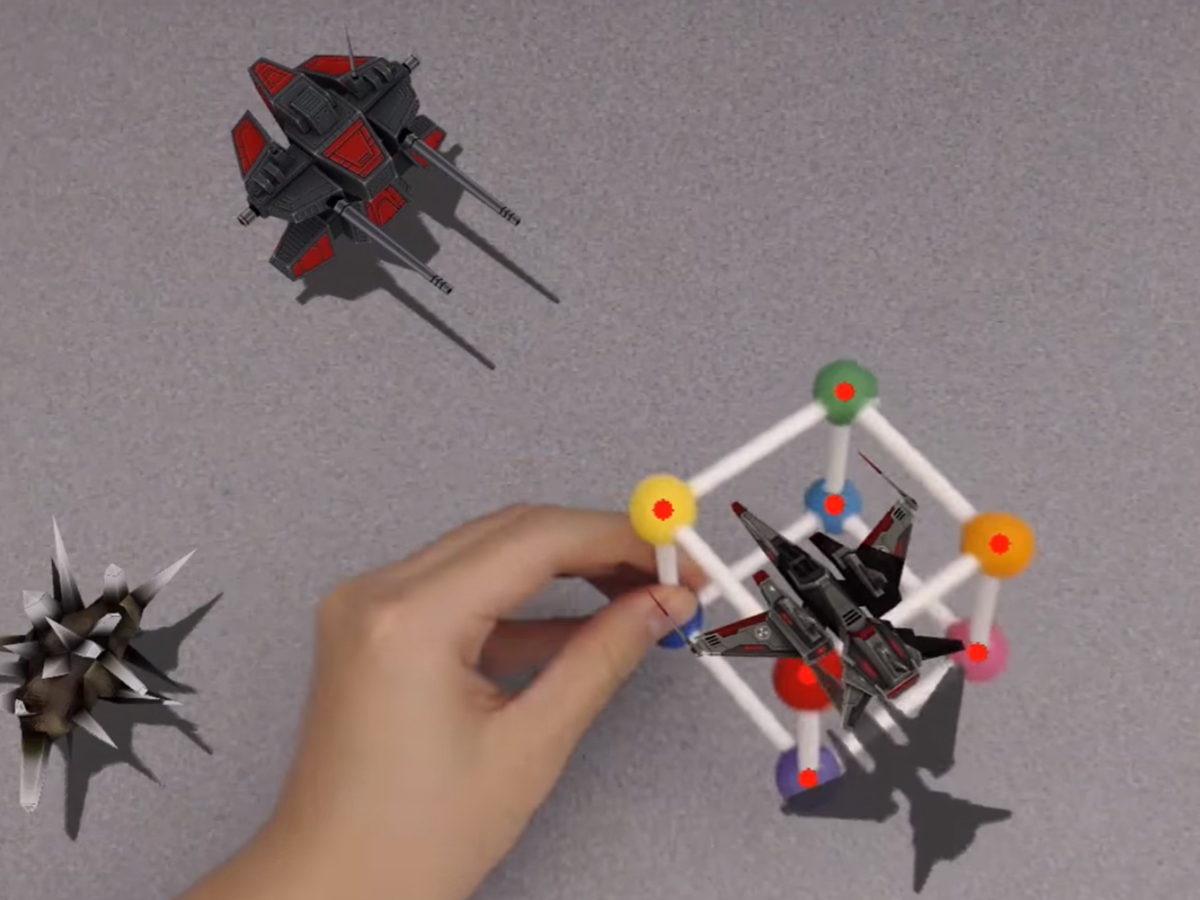

We have developed a tangible 6DoF input device for manipulating 3D information that is cheap and natural to use [CHA17]. The system consists of a single RGB camera and a 3D-printed wireframe cube (Figure 1). The pose of the cube is estimated with computer vision algorithms, so that when the user translates or rotates the cube, the reported 6DoF pose follows.

Figure 1 shows an example of using our device for AR game design. When a user translates or rotates the physical cube, the virtual object (e.g. the fighter) follows; see the online video demonstration at https://youtu.be/gRN5bYtYe3M.

The processing pipeline consists of two phases: location and estimation. In the location phase, the minimum region that covers the cube is detected based on the overall shape of the cube, as well as the correlation between video frames. In the estimation phase, the cube corners are located accurately and identified by colour. The pose of the cube is then estimated from the correspondences between the detected corner coordinates and the pre-defined mathematical model of the cube, which is known as the Perspective-n-Point (PnP) problem.
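To make the PnP formulation more concrete, the sketch below shows the forward model that a PnP solver typically works against: a candidate pose projects the cube's model corners into the image, and the pose is scored by how far those predicted corners land from the detected ones. This is only an illustration under stated assumptions, not the implementation described in [CHA17]. The cube size, the ideal pinhole camera, the intrinsics, and all function names are hypothetical, and the actual solver step (searching for the pose that minimises this error) is omitted.

```python
import math

# Eight corners of a cube model centred at the origin.
# HALF_EDGE (in metres) is an illustrative assumption, not the authors' value.
HALF_EDGE = 0.03
CUBE_CORNERS = [
    (sx * HALF_EDGE, sy * HALF_EDGE, sz * HALF_EDGE)
    for sx in (-1, 1) for sy in (-1, 1) for sz in (-1, 1)
]


def rotation_matrix(axis, angle):
    """Rodrigues' formula: rotation by `angle` radians about `axis`."""
    norm = math.sqrt(sum(a * a for a in axis))
    x, y, z = (a / norm for a in axis)
    c, s = math.cos(angle), math.sin(angle)
    t = 1.0 - c
    return [
        [t * x * x + c,     t * x * y - s * z, t * x * z + s * y],
        [t * x * y + s * z, t * y * y + c,     t * y * z - s * x],
        [t * x * z - s * y, t * y * z + s * x, t * z * z + c],
    ]


def project(points, rotation, translation, focal, centre):
    """Project 3D model points to pixels with an ideal pinhole camera."""
    pixels = []
    for p in points:
        # Transform the model point into the camera frame: X_c = R p + t.
        xc, yc, zc = (
            sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
            for i in range(3)
        )
        pixels.append((focal * xc / zc + centre[0], focal * yc / zc + centre[1]))
    return pixels


def reprojection_error(detected, points, rotation, translation, focal, centre):
    """Root-mean-square pixel distance between detected and predicted corners."""
    predicted = project(points, rotation, translation, focal, centre)
    squared = [
        (u - pu) ** 2 + (v - pv) ** 2
        for (u, v), (pu, pv) in zip(detected, predicted)
    ]
    return math.sqrt(sum(squared) / len(squared))


# Simulate "detected" corners from a known pose, then score two candidate poses.
FOCAL, CENTRE = 800.0, (320.0, 240.0)   # assumed camera intrinsics, in pixels
true_R = rotation_matrix((0.0, 1.0, 0.0), math.radians(30.0))
true_t = (0.0, 0.0, 0.5)                # cube 0.5 m in front of the camera
detected = project(CUBE_CORNERS, true_R, true_t, FOCAL, CENTRE)

good = reprojection_error(detected, CUBE_CORNERS, true_R, true_t, FOCAL, CENTRE)
off_R = rotation_matrix((0.0, 1.0, 0.0), math.radians(35.0))
bad = reprojection_error(detected, CUBE_CORNERS, off_R, true_t, FOCAL, CENTRE)
print(good, bad)  # the correct pose scores zero; the pose that is 5 degrees off does not
```

In practice one would usually hand the 2D-3D correspondences to an existing PnP solver rather than search over poses by hand; the point here is only what "solving the correspondences" is measured against.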

In several experiments, our device showed important improvements over existing controllers. The frame rate is 63.75 frames per second (FPS) on average and the mean estimation error is only one degree. In standard virtual object matching experiments, users needed only 2.79 seconds to finish one rotation task with our device, which represents the best performance so far for similar experimental settings.
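As a rough illustration of what an orientation error "in degrees" can mean, the sketch below computes the angle of the single rotation that takes an estimated orientation to a reference one. This is a common way to compare two rotations, but it is an assumption here: the article does not state which error metric was used, and the function names are hypothetical.

```python
import math


def rotation_about_z(degrees):
    """Rotation matrix for a turn of `degrees` about the z axis."""
    a = math.radians(degrees)
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def angular_error_degrees(r_est, r_true):
    """Angle of the relative rotation R_est^T R_true, in degrees."""
    # trace(R_est^T R_true) equals the element-wise product summed over all entries.
    trace = sum(r_est[i][j] * r_true[i][j] for i in range(3) for j in range(3))
    # Clamp to guard against rounding pushing the cosine slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))


# An estimate that is one degree away from the reference orientation.
error = angular_error_degrees(rotation_about_z(31.0), rotation_about_z(30.0))
print(round(error, 6))
```

Averaging this quantity over many frames with known reference orientations would give a mean error figure of the kind quoted above.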

Our system works robustly in various challenging environments. Firstly, we have developed an automatic lighting adjustment system and carefully chosen the colour model so that even dramatic changes in lighting do not affect the performance of our device. Secondly, the system is insensitive to occlusion: up to four corners can be occluded with only a slight drop in accuracy. Thirdly, our device is insensitive to background clutter and noise, even when some of the background objects have similar shapes or colours to the cube corners.
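The choice of colour model matters because some representations separate colour from brightness. The sketch below illustrates the idea with HSV, where hue stays the same when all RGB channels are scaled by the same factor, as happens when the lighting dims uniformly. HSV is an assumption made for illustration only: the article does not say which colour model the system uses, and the palette and function names are hypothetical.

```python
import colorsys

# Hypothetical corner colours, given as hue angles in degrees.
PALETTE = {"red": 0.0, "yellow": 60.0, "green": 120.0, "blue": 240.0}


def hue_degrees(r, g, b):
    """Hue of an RGB colour (channels in [0, 1]) on a 0-360 degree wheel."""
    h, _s, _v = colorsys.rgb_to_hsv(r, g, b)
    return h * 360.0


def classify_corner(r, g, b, palette=PALETTE):
    """Return the palette name whose hue is closest on the colour wheel."""
    h = hue_degrees(r, g, b)

    def circular_distance(h1, h2):
        d = abs(h1 - h2) % 360.0
        return min(d, 360.0 - d)

    return min(palette, key=lambda name: circular_distance(h, palette[name]))


# The same reddish corner under bright light and under light dimmed to 40%.
bright = (0.9, 0.2, 0.1)
dim = tuple(0.4 * c for c in bright)

print(classify_corner(*bright), classify_corner(*dim))
```

Real lighting changes are rarely a pure uniform scaling (colour temperature shifts, shadows, sensor clipping), which is presumably why an automatic lighting adjustment step is used alongside the colour model.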

Our device is simple to integrate with other display systems, such as stereo Fish Tank Displays and desktop environments, and it is equally simple to set up. Users need only install a regular camera (which is already available on most laptops and tablets), build the cube, install our software and run through a few automatic calibration steps. Using the device requires almost no training, since it is essentially the same as interacting with a physical object.

References

[CHA17] Zeyuan Chen, Christopher G. Healey, and Robert St. Amant. Performance characteristics of a camera-based tangible input device for manipulation of 3D information. In Proceedings of the 43rd Graphics Interface Conference, pages 74–81. Canadian Human-Computer Communications Society, 2017.

Please note: this is a commercial profile

Zeyuan Chen

Ph.D. Candidate

Computer Science Department,

North Carolina State University

Tel: +1 607 379 8335

Christopher G. Healey

Professor

Department of Computer Science

Goodnight Distinguished Professor,

Institute for Advanced Analytics

North Carolina State University

Tel: +1 919 515 3190