Extended Reality (XR) is an umbrella term for advanced interactive systems such as Virtual Reality (VR), Augmented Reality (AR), Mixed Reality (MR), and systems with advanced 3D user interfaces.

These systems have emerged in various domains, from entertainment and education to combat training and mission-critical applications. XR systems typically involve a representation of a virtual world, are highly interactive and tend to be more immersive than other technologies.

Testing extended reality for quality assurance

Testing for quality assurance (QA) is crucial in developing these systems. However, XR systems today are mainly tested manually: testers are assigned tasks designed to validate parts of the system. This process requires a lot of human time and effort, which makes testing costly. Testing XR is especially challenging because testers must interact with a huge interaction space whilst also ensuring a positive user experience.

The challenges and costs of current XR testing practices impede the development of XR technology and its adoption by a wider audience.

Horizon funding helping push technological advancements

The Intelligent Verification/Validation of Extended Reality Systems (iv4XR) is a project funded by the EU under the Horizon 2020 programme, bringing together partners from academia and industry. Its goal is to address the challenges above with a solution demonstrated in real-world pilots. The proposed solution relies on developing Artificial Intelligence testing agents that automate XR testing, of both functionality and user experience, reducing testing costs and opening up new testing possibilities.

Artificial Intelligence (AI) agents have been used for several years to model human cognitive behaviour. In lay terms, agents can be programmed to make inferences about an environment, and these inferences let them execute actions, or sequences of actions, to attain specific goals. AI agents can actively pursue testing goals (e.g., find the fastest way to the door), adapt to change (e.g., if the path is blocked, find a new way to the door), and provide intelligent coverage of the interaction space (e.g., find all possible ways to the door). They can also be programmed to represent features of different user profiles (e.g., the person who likes to explore every possibility vs. the person who prefers to reach the goal as soon as possible).
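To make the goal-pursuit, adaptation and coverage behaviours above more concrete, here is a minimal Python sketch on a hypothetical grid level. It is not iv4XR code: the grid, the function names (`shortest_path`, `all_simple_paths`) and the use of plain breadth-first and depth-first search are illustrative assumptions standing in for the much richer reasoning real agents perform. A shortest-path search plays the profile that wants to reach the door as soon as possible, re-running it with a blocked cell plays adaptation, and enumerating every loop-free path plays the explorer profile.

```python
from collections import deque
from typing import AbstractSet, Iterator, List, Optional, Tuple

Cell = Tuple[int, int]

# Hypothetical toy level: '.' free, '#' wall, 'S' agent start, 'D' the door.
GRID = [
    "S..#",
    ".#..",
    "...D",
]


def find(grid: List[str], ch: str) -> Cell:
    """Return the (row, col) of the first occurrence of ch in the grid."""
    for r, row in enumerate(grid):
        c = row.find(ch)
        if c != -1:
            return (r, c)
    raise ValueError(f"{ch!r} not in grid")


def neighbours(grid: List[str], cell: Cell, blocked: AbstractSet[Cell]) -> Iterator[Cell]:
    """Yield the walkable 4-connected neighbours of a cell."""
    r, c = cell
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nr, nc = r + dr, c + dc
        inside = 0 <= nr < len(grid) and 0 <= nc < len(grid[0])
        if inside and grid[nr][nc] != "#" and (nr, nc) not in blocked:
            yield (nr, nc)


def shortest_path(grid: List[str], start: Cell, goal: Cell,
                  blocked: AbstractSet[Cell] = frozenset()) -> Optional[List[Cell]]:
    """Goal pursuit: breadth-first search for a fewest-steps path, or None."""
    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk parent links back to the start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        for nxt in neighbours(grid, cell, blocked):
            if nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    return None


def all_simple_paths(grid: List[str], start: Cell, goal: Cell,
                     blocked: AbstractSet[Cell] = frozenset()) -> List[List[Cell]]:
    """Coverage: depth-first enumeration of every loop-free path to the goal."""
    paths: List[List[Cell]] = []

    def dfs(cell: Cell, path: List[Cell]) -> None:
        if cell == goal:
            paths.append(list(path))
            return
        for nxt in neighbours(grid, cell, blocked):
            if nxt not in path:
                path.append(nxt)
                dfs(nxt, path)
                path.pop()

    dfs(start, [start])
    return paths


if __name__ == "__main__":
    start, door = find(GRID, "S"), find(GRID, "D")
    print(shortest_path(GRID, start, door))            # fastest way to the door
    # [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2), (2, 3)]
    print(shortest_path(GRID, start, door, {(2, 1)}))  # path blocked: replan
    # [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 3)]
    print(len(all_simple_paths(GRID, start, door)))    # all ways to the door
    # 4
```

Exhaustive path enumeration grows exponentially with the size of a level, which is one reason practical agents need smarter coverage strategies than this brute-force sketch.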

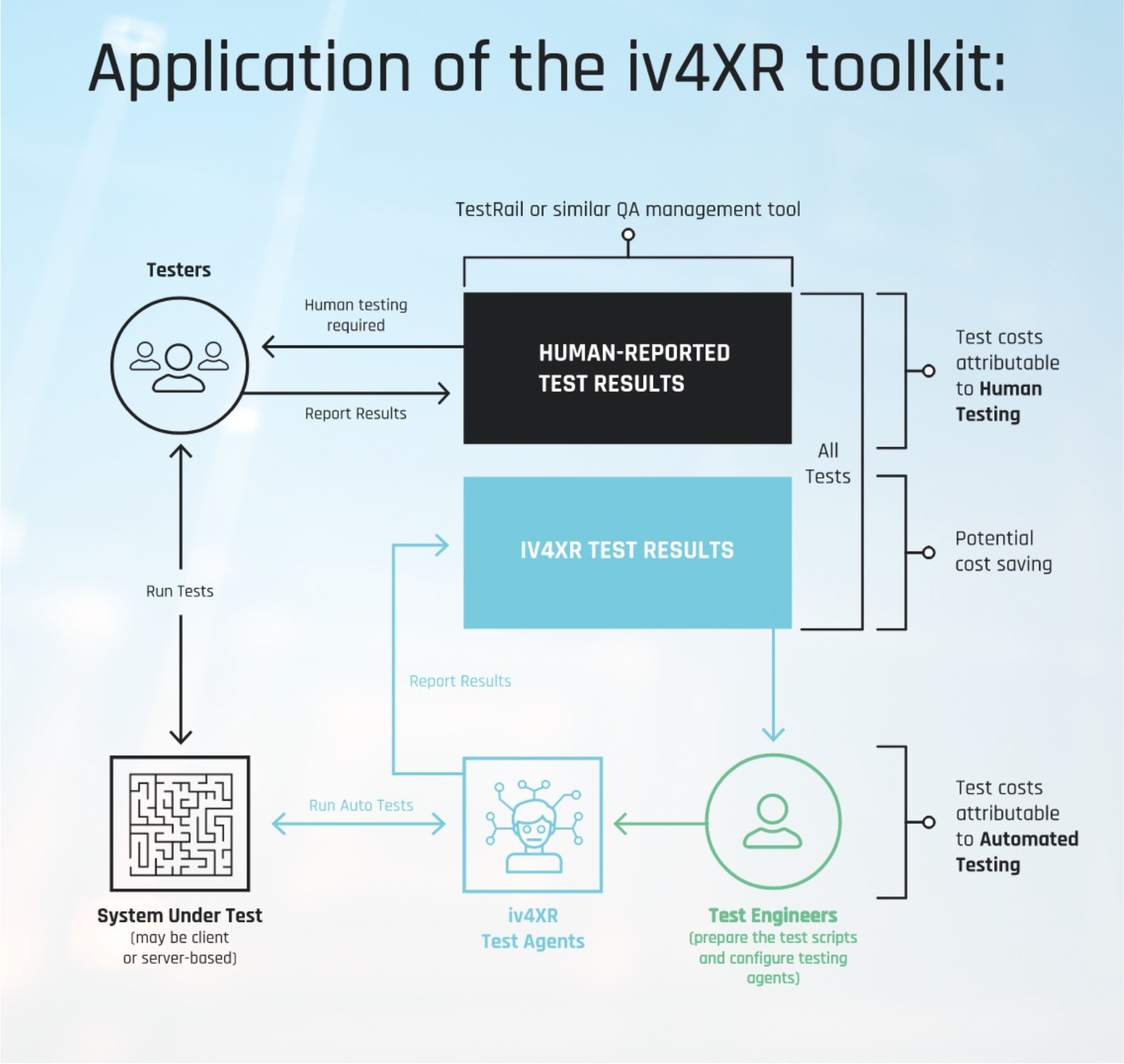

The iv4XR project developed an open-source testing toolkit whose core framework makes it easier to deploy testing agents on a System Under Test (SUT): it provides an interface through which the agents can understand and act on the system. To test the system, the user specifies a testing task, formulated as a set of goals (i.e., what the agent needs to achieve) and a set of tactics that guide how the agent pursues them (i.e., the underlying strategy that constrains the kind of inferences the agent makes).
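The split between goals and tactics can be illustrated with a small Python sketch. Everything in it (the `Goal`, `Action`, `first_enabled`, `pursue` and `run_task` names, the toy door scenario and the step budget) is a hypothetical simplification for illustration, not the iv4XR toolkit's API. Here a goal is a predicate over the observed state, a tactic picks the next action from a list of guarded actions, and a testing task is an ordered list of goal/tactic pairs.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class State:
    """What the agent can observe of the (here simulated) system under test."""
    position: int = 0
    door_open: bool = False
    log: List[str] = field(default_factory=list)


@dataclass
class Action:
    name: str
    guard: Callable[[State], bool]   # when the action is enabled
    effect: Callable[[State], None]  # how it changes the system


@dataclass
class Goal:
    name: str
    achieved: Callable[[State], bool]  # what the agent needs to achieve


Tactic = Callable[[State], Optional[Action]]


def first_enabled(*actions: Action) -> Tactic:
    """Tactic combinator: choose the first action whose guard holds."""
    def choose(state: State) -> Optional[Action]:
        for action in actions:
            if action.guard(state):
                return action
        return None
    return choose


def pursue(goal: Goal, tactic: Tactic, state: State, budget: int = 20) -> bool:
    """Apply the tactic step by step until the goal holds, no action is
    enabled, or the step budget runs out."""
    for _ in range(budget):
        if goal.achieved(state):
            return True
        action = tactic(state)
        if action is None:
            return False
        action.effect(state)
        state.log.append(action.name)
    return goal.achieved(state)


def run_task(task: List[Tuple[Goal, Tactic]], state: State) -> bool:
    """A testing task: ordered (goal, tactic) pairs that must all succeed."""
    return all(pursue(goal, tactic, state) for goal, tactic in task)


# Hypothetical scenario: walk to a door at position 3, open it, enter the room at 5.
DOOR_AT, ROOM_AT = 3, 5


def step(s: State) -> None:
    s.position += 1


def open_door(s: State) -> None:
    s.door_open = True


walk = Action("step", lambda s: s.position < DOOR_AT or s.door_open, step)
opener = Action("open", lambda s: s.position == DOOR_AT and not s.door_open, open_door)

TASK = [
    (Goal("door is open", lambda s: s.door_open), first_enabled(opener, walk)),
    (Goal("inside the room", lambda s: s.position >= ROOM_AT), first_enabled(walk)),
]

if __name__ == "__main__":
    state = State()
    print(run_task(TASK, state), state.log)
    # True ['step', 'step', 'step', 'open', 'step', 'step']
```

Keeping the goal separate from the tactic means the same goal can be re-run with a different tactic (for example, one without the `opener` action, which makes `pursue` report failure at the closed door) without rewriting the test.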

Incorporating machine learning, exploration, and affective models

By incorporating machine learning, search and exploration, and model-based capabilities into the agents, the iv4XR toolkit offers extended versions of the testing agents. The current toolkit offers online goal-solving agents, which try to solve a specific goal by following a set of tactics; exploratory test agents for scriptless testing; model-based coverage and automated test case generation; and socio-emotional test agents for testing user experience (UX). The model-based capability allows high-quality functional tests to be generated from a behavioural model of a system. If no model is available, tests can instead be specified declaratively, and a test agent can use its online goal-solving capability to find a way to carry them out.
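As an illustration of generating functional tests from a behavioural model, the following Python sketch derives one test case per transition of a small state machine (transition coverage) and uses the model's target state as the expected result. The door model, the deliberately buggy `BuggyDoor` stand-in for the SUT and all function names are hypothetical, and transition coverage is just one common model-based criterion, not necessarily the one iv4XR uses.

```python
from collections import deque
from typing import Callable, Dict, List, Tuple

# Hypothetical behavioural model of a door: state -> {event: next state}.
Model = Dict[str, Dict[str, str]]
MODEL: Model = {
    "closed": {"open": "opened", "lock": "locked"},
    "opened": {"close": "closed"},
    "locked": {"unlock": "closed"},
}

TestCase = Tuple[List[str], str]  # (events to fire, expected final state)


def shortest_prefixes(model: Model, initial: str) -> Dict[str, List[str]]:
    """Breadth-first search: the shortest event sequence reaching each state."""
    prefix: Dict[str, List[str]] = {initial: []}
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        for event, target in model.get(state, {}).items():
            if target not in prefix:
                prefix[target] = prefix[state] + [event]
                queue.append(target)
    return prefix


def transition_cover(model: Model, initial: str) -> List[TestCase]:
    """One test per transition: reach its source state, then fire its event.
    The model's target state serves as the test oracle."""
    prefix = shortest_prefixes(model, initial)
    tests: List[TestCase] = []
    for source, edges in model.items():
        if source not in prefix:  # unreachable from the initial state
            continue
        for event, target in edges.items():
            tests.append((prefix[source] + [event], target))
    return tests


class BuggyDoor:
    """Stand-in for the system under test, with a planted bug:
    'unlock' leaves the door locked."""
    TRANSITIONS = {
        ("closed", "open"): "opened",
        ("opened", "close"): "closed",
        ("closed", "lock"): "locked",
        ("locked", "unlock"): "locked",  # bug: should be "closed"
    }

    def __init__(self) -> None:
        self.state = "closed"

    def fire(self, event: str) -> None:
        # Events with no defined transition leave the state unchanged.
        self.state = self.TRANSITIONS.get((self.state, event), self.state)


def run_tests(tests: List[TestCase],
              make_sut: Callable[[], BuggyDoor]) -> List[Tuple[List[str], str, str]]:
    """Execute each test on a fresh SUT; return (events, expected, actual) for failures."""
    failures = []
    for events, expected in tests:
        sut = make_sut()
        for event in events:
            sut.fire(event)
        if sut.state != expected:
            failures.append((events, expected, sut.state))
    return failures


if __name__ == "__main__":
    generated = transition_cover(MODEL, "closed")
    print(len(generated), run_tests(generated, BuggyDoor))
    # 4 [(['lock', 'unlock'], 'closed', 'locked')]
```

Running the four generated tests against `BuggyDoor` flags only the lock/unlock sequence, the one transition whose implementation disagrees with the model.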

UX is a complex concept that encompasses multiple interconnected components, such as user emotion, system difficulty, and cognitive load. The framework's socio-emotional agents can use affective models that predict emotion, built both from data collected with human participants and from psychological models of emotion, along with models of cognitive load, difficulty estimation, and motion-sickness prediction in VR environments. For example, these agents can explore a given environment and report how emotions vary along specific paths through it.
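To show what reporting emotion variations along a path might look like, here is a deliberately simple Python sketch. The appraisal rule (hope grows as the remaining distance to the goal shrinks, fear grows near a hazard) is an invented toy, not one of the affective models described above, which are derived from human data and psychological theory; the routes, the hazard and the function names are likewise hypothetical.

```python
from typing import Dict, List, Tuple

Cell = Tuple[int, int]


def manhattan(a: Cell, b: Cell) -> int:
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def appraise(cell: Cell, goal: Cell, hazards: List[Cell], start_dist: int) -> Dict[str, float]:
    """Toy appraisal of one position (hypothetical, not an iv4XR model).
    hope: 1 minus the remaining goal distance relative to the starting distance
    (0 at the start, 1 at the goal). fear: 1 / (1 + d) for the nearest hazard."""
    hope = 1.0 - manhattan(cell, goal) / start_dist if start_dist else 1.0
    fear = max((1.0 / (1 + manhattan(cell, h)) for h in hazards), default=0.0)
    return {"hope": hope, "fear": fear}


def emotion_trace(path: List[Cell], goal: Cell, hazards: List[Cell]) -> List[Dict[str, float]]:
    """Appraise every position an agent visits along a path."""
    start_dist = manhattan(path[0], goal)
    return [appraise(cell, goal, hazards, start_dist) for cell in path]


def summarise(trace: List[Dict[str, float]]) -> Dict[str, float]:
    """Condense a trace into a few numbers a tester could compare across paths."""
    fears = [step["fear"] for step in trace]
    return {
        "peak_fear": max(fears),
        "mean_fear": sum(fears) / len(fears),
        "final_hope": trace[-1]["hope"],
    }


# Hypothetical scenario: two routes to the same goal, one crossing a hazard.
GOAL, HAZARDS = (2, 3), [(2, 1)]
LOW_ROUTE = [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2), (2, 3)]
HIGH_ROUTE = [(0, 0), (0, 1), (0, 2), (1, 2), (1, 3), (2, 3)]

if __name__ == "__main__":
    for name, route in (("low", LOW_ROUTE), ("high", HIGH_ROUTE)):
        print(name, summarise(emotion_trace(route, GOAL, HAZARDS)))
```

Comparing the summaries shows the low route reaching maximum fear where it crosses the hazard, while the high route peaks at 1/3; both end with full hope at the goal.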

The solution has been developed and validated on three use cases, one from each of the three companies in the consortium. GoodAI presents a use case built on the game Space Engineers, a complex multiplayer open-world game with volumetric physics. The intelligent testing agents not only saved testers many hours but also introduced test engineers to new testing practices, which call for different strategies for defining tests.

Thales has been working on a simulation of an intrusion into a nuclear plant, aiming to automate its testing and improve verification quality. What was once a manual procedure involving several testers can now be performed by a single tester, because the agents can simulate different intrusion techniques much faster.

Gameware tests smart construction software that deploys sensors to monitor critical infrastructure. In this case, the testing agents provided additional diagnostics and helped identify significantly more errors, which the previous testing tools had not found.

The future of extended reality testing

In conclusion, the agent-based testing promoted by the iv4XR project is a promising approach to automating the testing of extended reality systems. It can substantially reduce costs for XR developers by supporting faster development sprints that shorten a product's time to market. Creating automated testing toolkits can be a crucial step towards fostering innovation in the XR industry and wider adoption of XR technology.

This work is licensed under Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International.