AI suffers from inaccurate, numerically unvalidated calculations, which lead to randomly accurate and unreliable results. Accuracy is the deviation from a true value, which Inora Technologies’ OICT provides.

“We cannot solve our problems with the same level of thinking that created them” (Albert Einstein)

The foundation of intelligence is the accurate and reliable processing of information. Even though artificial intelligence (AI) currently has significant hype, we believe AI is not intelligent. This is, unfortunately, true because the calculations within the AI completely lack the ability to numerically evaluate complex data accurately.

AI and, specifically, large language models (LLMs) base their calculations on neural networks. These networks “learn” to orient themselves within data sets by randomly making internal adjustments until the results “look good” to subjective human judgment.

The AI process corrodes data fidelity and eliminates any chance of proper understanding. Not even the creators of LLMs know how these systems reach their conclusions (see recent articles from Computerworld and The New York Times). For all of these reasons, our view is that the results from AI (machine learning, or whatever other name it may go by) are inaccurate and uncertain. However, there is a way out of the AI trap, thanks to Inora.

Introducing Inora Technologies

At Inora Technologies, as the INstitute for Optimization, Regulation and Adjustment, it is our responsibility to call out AI for what it really is, a hoax.

Isaac Newton recognized that “Nature is Pleased with Simplicity and Nature is No Dummy”. Humans, other living organisms, and nature are intelligent because they intuitively and instantly process information in correlation with the actual value of equilibrium. A “strength” touted by AI, the memorization of information, is merely a secondary tool, not a substitute for true intelligence.

Furthermore, the further an AI is pushed beyond what it has been trained on, the clearer it becomes that it is not intelligent at all. This root problem cannot be cured by more training: to properly train a machine, every possible real-world combination of scenarios and variables would have to be perfectly mapped out, which is an infinite and therefore impossible task. Additionally, flawed data pre-processing techniques, such as normalization and filtering, have devastating effects, making AI results inaccurate and uncertain. AI cannot fix itself because of the numerical deficiencies it is built on.

Inora respects the programmers who try to make AI work. However, we challenge leaders who are unaware of first principles in logic and mathematics and who prioritize hype over reliable, correct, and safe results.

Solving AI and current data analysis methods

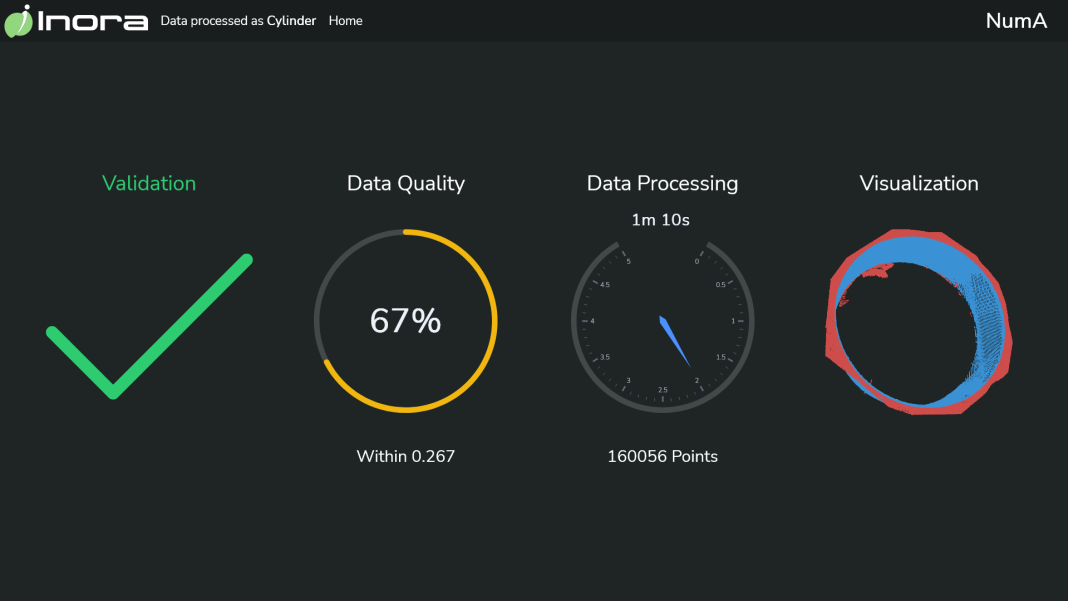

Inora’s Organic Intelligence Core Technology (OICT) solves the core problems of AI and current data analysis methods.

OICT was created on the logic that numerical accuracy can be achieved in any complex data evaluation. It took decades of scientific research to overcome the mathematical obstacles that Einstein refers to in his book Sidelights on Relativity: “As far as the laws of mathematics refer to reality, they are not certain; and as far as they are certain, they do not refer to reality.” The problem pointed out here is the inability of traditional mathematical approaches to determine real-world data sets with absolute accuracy, which would allow for determining reality with certainty.

There is absolutely perfect geometry in everything. If we observe perfect results, we know we have a true value in our calculations. Inspired by this Newtonian viewpoint, the inventors of OICT built a numerical platform that provides universal and absolute perfect geometry in any raw data set. Calculating raw data sets raises a host of numerical challenges, such as differing coordinate systems and units, quantitative vs. qualitative data, numerical singularities, heteroscedasticity, hidden/latent restrictions, and linear vs. non-linear relationships. OICT developers solved all of the required fundamental numerical challenges, dealing with them in a logical order and with painstaking diligence. Most of these numerical problems and their implications have been published (e.g., Erik W. Grafarend, Linear and Nonlinear Models: Fixed Effects, Random Effects, and Mixed Models, De Gruyter).

A logical, understandable, and reliable Intelligence

The OICT developers have created a logical, understandable, and reliable Intelligence that relies on known mathematical principles, verifies its results, and solves the problem AI has created.

After overcoming the numerical challenges, perfect geometry can only be achieved on a universal, neutral numerical foundation. OICT uses a Grassmann coordinate system plus two additional key elements to accomplish this task.

The first element OICT delivers is finding the true center point of any data set, as it is unique and perfectly defined. Determining the center requires calculating the geometrical weight relationship of all raw data in a point cloud without the influence of perspective, spatial orientation issues, and inaccuracy from assumptions. The center cannot be properly found when based on an assumed model (e.g., neural networks). It must be based on perfect symmetry. It is very simple: with no true center, there is no perfect geometry.
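As a minimal illustration of a center that does not depend on spatial orientation, the sketch below uses the plain arithmetic-mean centroid of a small 2-D point cloud. This is an assumption chosen for simplicity, not OICT's center calculation, which is not specified here. The names `centroid` and `transform` and the sample points are hypothetical. The sketch only shows that rotating and shifting the whole cloud moves its centroid by exactly the same rotation and shift.

```python
import math

def centroid(points):
    """Arithmetic-mean center of a list of (x, y) points (illustrative stand-in)."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)

def transform(point, angle, shift):
    """Rotate a point about the origin by `angle` (radians), then translate by `shift`."""
    x, y = point
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y + shift[0], s * x + c * y + shift[1])

# Hypothetical sample data.
cloud = [(1.0, 2.0), (4.0, 0.5), (3.0, 5.0), (0.0, 1.5)]
angle, shift = math.radians(37.0), (10.0, -3.0)

# Center of the moved cloud ...
center_of_moved = centroid([transform(p, angle, shift) for p in cloud])
# ... compared with the original center moved by the same transformation.
moved_center = transform(centroid(cloud), angle, shift)

center_is_consistent = all(
    math.isclose(a, b, abs_tol=1e-12)
    for a, b in zip(center_of_moved, moved_center)
)
print(center_is_consistent)
```

Because the comparison is done in floating point, it uses a small tolerance rather than exact equality.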

The second element of OICT stems from Newton’s third law: “For Every Action, there is an Equal and Opposite Reaction.” Starting in one dimension, this is true for any non-zero number (8 = 8, therefore 8 × 1/8 = 1). In simple words, the perfect symmetry of a number equaling itself is proven by multiplying the number by its inverse, which in OICT is called numerical balancing. Moving from 1-D to n-D, the same is true for matrix calculations (unity matrix = input matrix × inverse matrix), which is a key component of OICT.
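The balancing relation can be tried out in a few lines. The sketch below is a generic Gauss-Jordan inversion using exact rational arithmetic from Python's standard library, so that the product of a matrix and its inverse reproduces the unity matrix with no rounding residue. It is an illustration under our own assumptions, not OICT code. The helper names `identity`, `matmul`, and `inverse` and the sample matrix are hypothetical.

```python
from fractions import Fraction

def identity(n):
    """n x n unity (identity) matrix with exact rational entries."""
    return [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]

def matmul(a, b):
    """Plain matrix product of two lists-of-rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def inverse(m):
    """Gauss-Jordan inverse with exact rational arithmetic."""
    n = len(m)
    # Build the augmented matrix [m | I].
    aug = [[Fraction(v) for v in row] + unit
           for row, unit in zip(m, identity(n))]
    for col in range(n):
        # Find a row with a non-zero pivot in this column.
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular and has no inverse")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pivot_value = aug[col][col]
        aug[col] = [v / pivot_value for v in aug[col]]
        # Eliminate the column from every other row.
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

# 1-D case: a number times its inverse equals one.
balanced_1d = Fraction(8) * (1 / Fraction(8))

# n-D case: input matrix times inverse matrix equals the unity matrix.
A = [[4, 7, 2],
     [3, 6, 1],
     [2, 5, 3]]
A_inv = inverse(A)
balanced_nd = matmul(A, A_inv) == identity(3)

print(balanced_1d == 1, balanced_nd)
```

Exact fractions are used here only to make the equality check strict. With ordinary floating-point numbers the product would match the unity matrix only up to rounding error.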

The center point and numerical balancing are only reliably determinable if they are numerically validated using at least one independent method that was not used to calculate the results in the first place. In matrix calculations, the validations are more numerous and more complex. OICT applies a Primal-Dual Principle across all calculations; one good example is the Ansermet validation (A. Ansermet, 1945).
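To make the idea of a validation concrete, the sketch below runs one check known from least-squares adjustment: the weighted sum of the cofactors of the adjusted observations must equal the number of unknowns (equivalently, the trace of the hat matrix equals the number of unknowns). The geodetic adjustment literature commonly associates this check with Ansermet, but reading it as the validation meant above is an assumption, and the sketch is not OICT code. The straight-line model, the observation times, and the weights are hypothetical.

```python
from fractions import Fraction

# Hypothetical example: fit y = a + b*t to five weighted observations.
t = [0, 1, 2, 3, 4]
weights = [Fraction(w) for w in (1, 2, 1, 3, 2)]     # diagonal of weight matrix P
design = [[Fraction(1), Fraction(ti)] for ti in t]   # design matrix A (5 x 2)
unknowns = 2                                         # a and b

# Normal matrix N = A^T P A (symmetric 2 x 2).
n11 = sum(p * a[0] * a[0] for p, a in zip(weights, design))
n12 = sum(p * a[0] * a[1] for p, a in zip(weights, design))
n22 = sum(p * a[1] * a[1] for p, a in zip(weights, design))

# Cofactor matrix Q = N^-1 via the closed-form 2 x 2 inverse.
det = n11 * n22 - n12 * n12
q11, q12, q22 = n22 / det, -n12 / det, n11 / det

# Cofactor of each adjusted observation: q_i = a_i Q a_i^T.
q_adjusted = [
    a[0] * a[0] * q11 + 2 * a[0] * a[1] * q12 + a[1] * a[1] * q22
    for a in design
]

# Validation: sum of p_i * q_i must equal the number of unknowns.
check_sum = sum(p * q for p, q in zip(weights, q_adjusted))
print(check_sum == unknowns)
```

The check never touches the observed values or the estimated parameters. It tests only the weights, the design matrix, and the inversion, which is why it can catch an error in the inverse independently of the solution itself.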

The reward for diligently following first principles is the overwhelming joy of discovering that the accurately calculated unity matrix, more specifically the balance points between the original and its inverse, always forms a perfect (no residuals) geometrical shape, a hyper-ellipsoid. This scientifically establishes the existence of an Inner Reference in everything, a true value representing the perfect balance between one thing and its inverse in n dimensions. OICT can do these calculations on a standard CPU without training. By comparison, the data computation of LLMs is massive, requiring specialized supercomputers and days, weeks, or months of training for inferior and uncertain results.
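The link between a matrix, its inverse, and an ellipsoid can be illustrated with textbook linear algebra. For a symmetric positive-definite matrix A, the points x with xᵀ A⁻¹ x = 1 form an ellipsoid whose semi-axes are the square roots of the eigenvalues of A. The 2-D sketch below is an illustration under that assumption only. It is not OICT's calculation, and the sample matrix is hypothetical. It generates points from the Cholesky factor of A and checks that each one satisfies the quadratic form defined by the inverse.

```python
import math

# Hypothetical symmetric positive-definite matrix A = [[a11, a12], [a12, a22]].
a11, a12, a22 = 4.0, 1.0, 3.0

# Inverse of A via the closed-form 2 x 2 formula.
det = a11 * a22 - a12 * a12
i11, i12, i22 = a22 / det, -a12 / det, a11 / det

# Cholesky factor L (lower triangular) with A = L L^T.
l11 = math.sqrt(a11)
l21 = a12 / l11
l22 = math.sqrt(a22 - l21 * l21)

def quadratic_form(x, y):
    """Evaluate x^T A^-1 x for the point (x, y)."""
    return i11 * x * x + 2.0 * i12 * x * y + i22 * y * y

# Map points of the unit circle through L; each image lies on the ellipse.
residuals = []
for k in range(12):
    angle = 2.0 * math.pi * k / 12
    u, v = math.cos(angle), math.sin(angle)
    x, y = l11 * u, l21 * u + l22 * v
    residuals.append(quadratic_form(x, y) - 1.0)
max_residual = max(abs(r) for r in residuals)

# Semi-axes of the ellipse: square roots of the eigenvalues of A.
mean = (a11 + a22) / 2.0
spread = math.hypot((a11 - a22) / 2.0, a12)
semi_axes = (math.sqrt(mean + spread), math.sqrt(mean - spread))

print(max_residual < 1e-12, semi_axes)
```

In floating point the residuals sit at rounding level rather than at exactly zero, so the check uses a tolerance.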

OICT is embedded in products such as InoraSRS and InoraASYS, providing certain results for manufacturing where current products and processes are uncertain (e.g., measurement uncertainty). Therefore, non-OICT process capability and product quality judgment are unreliable, costing the aerospace, automotive, and military industries time, money, scrap/rework, and, in extreme cases, human life.

Inora helps industry and science by providing encapsulated OICT software components. These modules can be integrated into any existing program or infrastructure. For the first time, this elevates today’s random accuracy and false positive/negative results to absolutely accurate, reliable results. This is the key to true and ultimate efficiency. Inora is uniquely positioned as the only company to provide truly intelligent results in data analysis amid AI’s hype and false claims.