Kensuke Harada from the Graduate School of Engineering Science, Osaka University, provides an introduction to and analysis of the move towards artificial intelligence (AI)-based technology in industrial robots.

One of the areas where expectations are highest for robots to replace human labour is the manufacturing of products in factories. However, as depicted in films such as “Modern Times,” factory work remains harsh for humans, and this is still a serious problem today despite advances in science and technology.

This is especially true of the assembly and inspection processes in high-mix, low-volume production. Robotising these processes means coping with frequent changeovers, which makes robotisation difficult.

Many robotics researchers have long tackled the problem of replacing human tasks with robots, yet robots have replaced only a small portion of them. This seems strange, since humans perform tasks with their hands without difficulty in daily life. Apart from tasks that require subtle force control and fine detail, which even humans find difficult, most tasks would seem to be easy for robots as well. However, in Penfield’s homunculus, a diagram that maps body parts onto the motor and somatosensory cortices, the five fingers and the palm occupy one-third of the motor cortex and one-quarter of the sensory cortex, and the hand and fingers are often called the second brain.

This suggests that the hand and finger functions we use without thinking actually rely on an enormous amount of information stored in the brain. In other words, if robots are to replace human tasks, the key research question is how robots can efficiently reproduce this information. Studying the functions of robot hands and fingers is therefore a profound problem that relates directly to the study of human intelligence.

This article presents our research on machine learning and motion planning for industrial robots. Such research is expected to create next-generation production businesses, such as variable-volume production and robot-as-a-service (RaaS).

Acquisition of human work behaviour

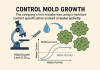

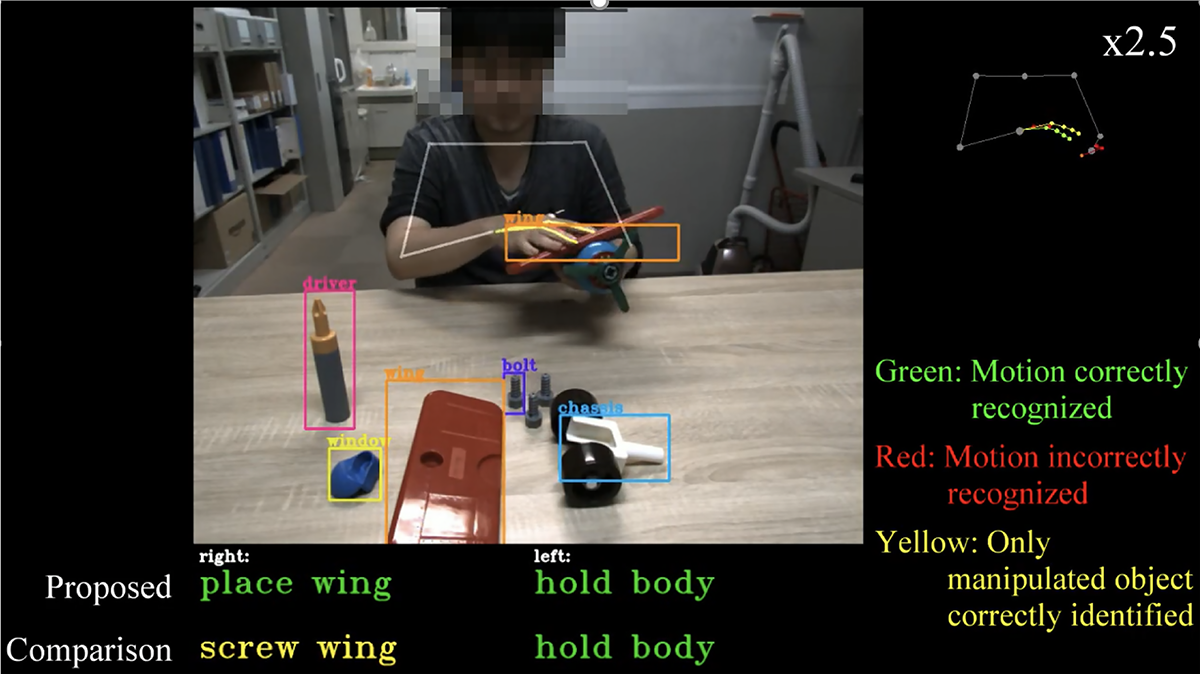

One strategy for robotising manufacturing processes is to capture human work behaviour (1). Analysing human work behaviour is indispensable for automating tasks with industrial robots. In our laboratory, we segment human work behaviour along the time axis and identify the type of work performed in each segment.

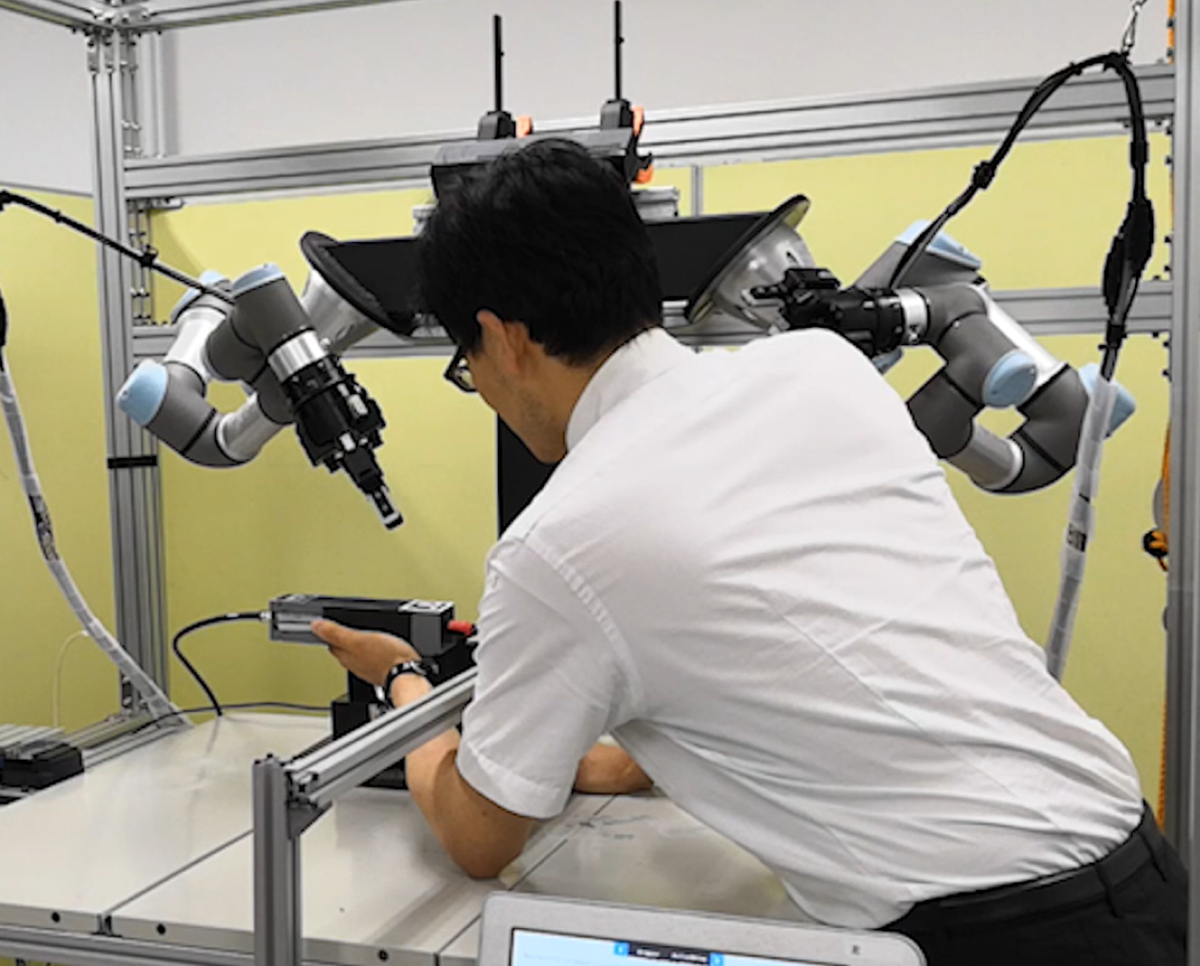

To address this problem, we propose a method that uses information about the object the human is grasping. Figure 1 shows a human assembling a children’s toy. For example, if the objects grasped by the human are a bolt, a wheel and a nut, we construct a hidden Markov model for each of them and identify the action based on the grasped object. In this way, we can narrow down the range of possible actions and increase the recognition success rate.
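As a rough illustration of this idea, the following Python sketch keeps a separate set of discrete hidden Markov models for each grasped object and scores an observation sequence only against the models for the object in hand, using the scaled forward algorithm. The object names, action labels, quantised observation symbols and all model parameters are hypothetical placeholders, not values from our system or from reference (1).

```python
import math


class DiscreteHMM:
    """Discrete HMM; all parameters used below are hypothetical."""

    def __init__(self, start, trans, emit):
        self.start = start  # start[i]: P(first hidden state = i)
        self.trans = trans  # trans[i][j]: P(state j at t+1 | state i at t)
        self.emit = emit    # emit[i][k]: P(observed symbol k | state i)

    def log_likelihood(self, obs):
        """Scaled forward algorithm: returns log P(obs | model)."""
        n = len(self.start)
        alpha = [self.start[i] * self.emit[i][obs[0]] for i in range(n)]
        total = 0.0
        for t in range(len(obs)):
            if t > 0:
                alpha = [
                    sum(alpha[i] * self.trans[i][j] for i in range(n))
                    * self.emit[j][obs[t]]
                    for j in range(n)
                ]
            scale = sum(alpha)  # P(obs[t] | obs[:t])
            if scale == 0.0:
                return float("-inf")
            total += math.log(scale)
            alpha = [a / scale for a in alpha]  # normalise to avoid underflow
        return total


# Hypothetical symbols: 0 = hand still, 1 = hand rotating.
rotate_hmm = DiscreteHMM([1.0, 0.0], [[0.5, 0.5], [0.0, 1.0]], [[0.9, 0.1], [0.1, 0.9]])
place_hmm = DiscreteHMM([1.0, 0.0], [[0.5, 0.5], [0.0, 1.0]], [[0.1, 0.9], [0.9, 0.1]])

# Hypothetical action models, grouped by the object in the hand.
MODELS = {
    "bolt": {"screw_in": rotate_hmm, "insert": place_hmm},
    "nut": {"tighten": rotate_hmm},
    "wheel": {"mount": place_hmm},
}


def recognise(grasped_object, obs):
    """Pick the most likely action among those plausible for the grasped object."""
    candidates = MODELS[grasped_object]
    return max(candidates, key=lambda action: candidates[action].log_likelihood(obs))
```

For example, `recognise("bolt", [0, 1, 1, 1])` compares only the two bolt models and returns `"screw_in"`. Restricting the candidate set by grasped object is what narrows the search; the wheel and nut models are never evaluated for a bolt segment.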

Task and motion planning

To automate the assembly process of high-mix, low-volume production with industrial robots, the key to success is how easily robot motions can be generated. To this end, research has been conducted on motion planning that automatically plans the motions of industrial robots for assembly and other tasks.

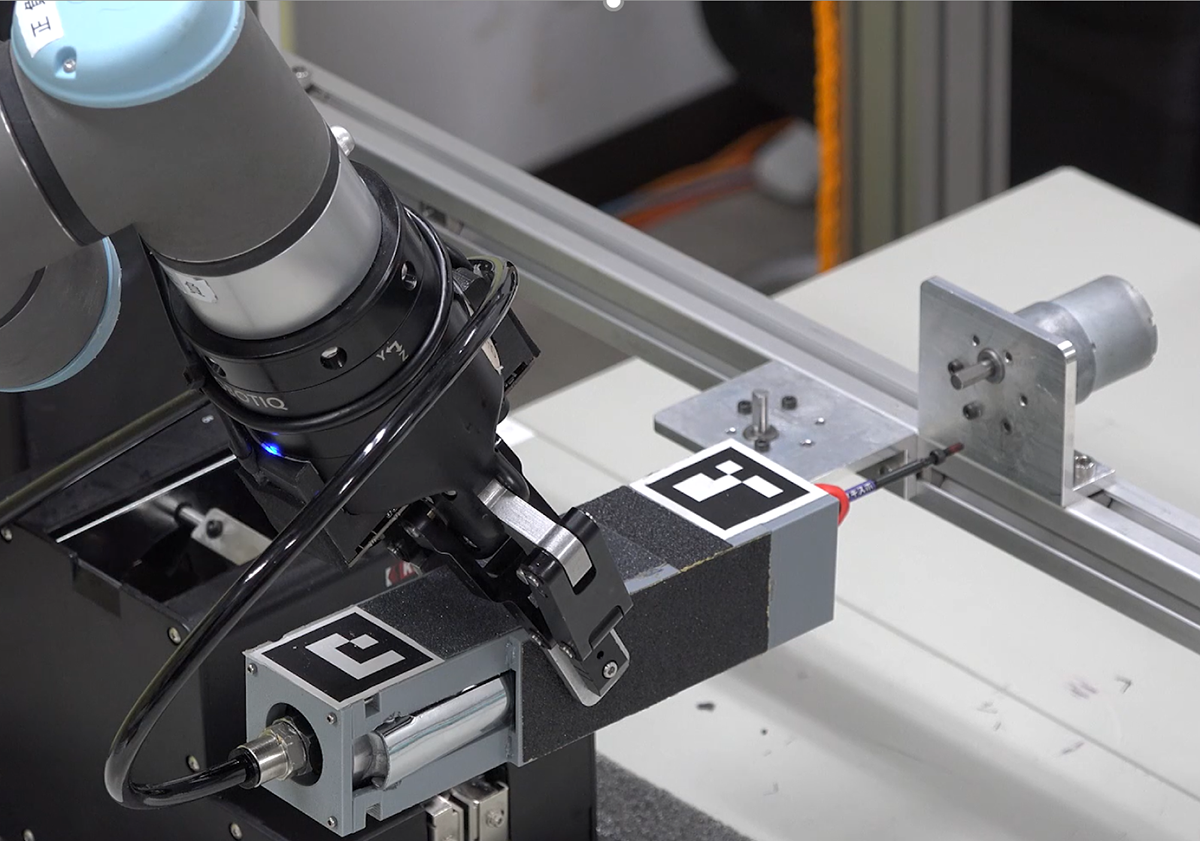

This section introduces a motion planning system in which a robot manipulates a tool based on a human demonstration (2). First, a human operator grasps a tool and performs a task, and the sequence of the tool’s positions and orientations is saved as the goal of the motion plan. Next, the work is divided into subtasks based on the stored target values. In addition, grasp planning is used to calculate the pose of the gripper that grasps the tool. Based on these results, the robot plans motions to pass the tool from one hand to the other, or to place the tool on the table and regrasp it.
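The step from subtasks and grasp candidates to handovers and regrasps can be sketched as a simple greedy search. The sketch below assumes, hypothetically, that a grasp planner has already produced, for each subtask, the set of feasible `(hand, grasp_id)` pairs. It keeps the current grasp while it stays feasible, otherwise switches to the grasp that remains feasible for the longest run of upcoming subtasks, and labels the switch as a handover (different hand) or a place-and-regrasp (same hand). This is an illustrative simplification, not the planner from reference (2); it ignores, for instance, whether both hands can reach the tool at the moment of handover.

```python
def plan_grasps(feasible):
    """feasible[k]: set of hypothetical (hand, grasp_id) pairs usable for subtask k."""
    plan = []
    current = None
    n = len(feasible)
    k = 0
    while k < n:
        if current is not None and current in feasible[k]:
            plan.append(("execute", k, current))  # keep the grasp we already hold
            k += 1
            continue
        if not feasible[k]:
            raise ValueError(f"no feasible grasp for subtask {k}")

        def run_length(grasp):
            """Number of consecutive subtasks from k that this grasp can serve."""
            m = k
            while m < n and grasp in feasible[m]:
                m += 1
            return m - k

        # sorted() makes tie-breaking deterministic
        best = max(sorted(feasible[k]), key=run_length)
        if current is None:
            plan.append(("pick", best))
        elif best[0] != current[0]:
            plan.append(("handover", current, best))  # other hand takes the tool
        else:
            plan.append(("place_and_regrasp", current, best))  # via the table
        current = best
    return plan
```

With `[{("right", "g1"), ("right", "g2")}, {("right", "g1")}, {("left", "g3"), ("right", "g2")}, {("right", "g2")}]`, the sketch picks `g1`, executes the first two subtasks, then places the tool and regrasps it with `g2` for the last two.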

The robot’s motion planner takes the resulting sequence of poses and plans detailed motions between consecutive poses. In Fig. 2, a human operator manipulates a screw-tightening tool to teach the robot the tool’s movements. Based on this demonstration, the robot plans and executes the screw-tightening operation using the tool.
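One ingredient of filling in motion between consecutive demonstrated poses is interpolating the tool pose itself. The sketch below, under the assumption that each pose is a position plus a unit quaternion `(w, x, y, z)`, linearly interpolates position and uses spherical linear interpolation (slerp) for orientation. A real planner would additionally solve inverse kinematics and check collisions along the path; this shows only the pose interpolation step, and the function names are hypothetical.

```python
import math


def slerp(q0, q1, s):
    """Spherical linear interpolation between unit quaternions (w, x, y, z)."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:  # q and -q are the same rotation: take the shorter arc
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:  # nearly identical: linear interpolation is accurate enough
        q = tuple(a + s * (b - a) for a, b in zip(q0, q1))
    else:
        theta = math.acos(dot)
        w0 = math.sin((1 - s) * theta) / math.sin(theta)
        w1 = math.sin(s * theta) / math.sin(theta)
        q = tuple(w0 * a + w1 * b for a, b in zip(q0, q1))
    norm = math.sqrt(sum(c * c for c in q))
    return tuple(c / norm for c in q)  # re-normalise against rounding error


def interpolate_poses(poses, steps):
    """poses: list of (position_xyz, quaternion_wxyz); returns dense waypoints."""
    waypoints = [poses[0]]
    for (p0, q0), (p1, q1) in zip(poses, poses[1:]):
        for i in range(1, steps + 1):
            s = i / steps
            p = tuple(a + s * (b - a) for a, b in zip(p0, p1))
            waypoints.append((p, slerp(q0, q1, s)))
    return waypoints
```

Interpolating from the identity orientation to a 90° rotation about the z-axis with `steps=2` yields a midpoint rotated 45° about z, halfway along the straight-line path between the two positions.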

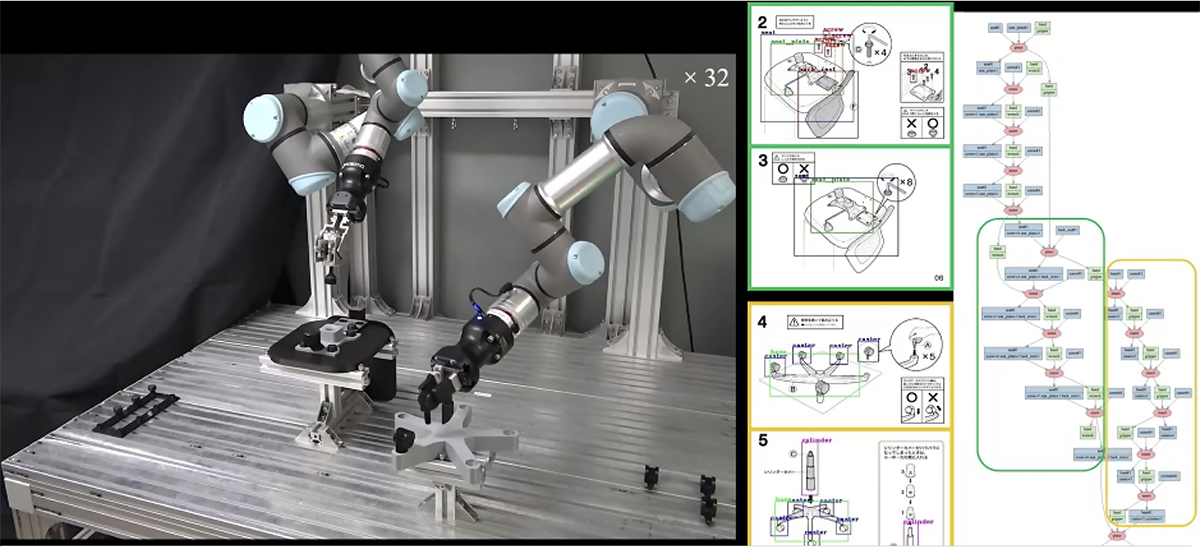

Next, rather than teaching a task through a human demonstration, we are researching how to automatically analyse an assembly manual and convert it into robot motions (3). When people buy furniture from IKEA or Nitori, they assemble it while looking at the assembly manual. Fig. 3 shows an assembly work plan based on the assembly manual of a Nitori chair (4), together with the robot’s actual assembly work. Here, the robot recognises from the diagrams in the manual which parts appear in which frames, estimates the operations required to assemble those parts, and from these data constructs a graph structure called the Assembly Task Sequence Graph. Once this graph is built, the robot can automatically plan and execute the assembly tasks based on it.
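To make the role of such a graph concrete, the sketch below assumes, hypothetically, that each recognised step lists the parts or sub-assemblies it uses and the sub-assembly it produces. It links a step to every step whose output it consumes, then derives an executable order with a topological sort from Python’s standard `graphlib` module (Python 3.9+). The step and part names are invented for illustration; the real Assembly Task Sequence Graph in reference (3) carries more information than this.

```python
from graphlib import TopologicalSorter

# Hypothetical steps recognised from manual frames: each step consumes parts
# or sub-assemblies and produces a new sub-assembly.
STEPS = {
    "attach_casters": {"uses": ["base", "caster"], "makes": "base_with_casters"},
    "attach_gas_lift": {"uses": ["base_with_casters", "gas_lift"], "makes": "lower_body"},
    "attach_backrest": {"uses": ["seat", "backrest"], "makes": "upper_body"},
    "join_seat": {"uses": ["lower_body", "upper_body"], "makes": "chair"},
}


def build_task_graph(steps):
    """Map each step to the set of steps whose outputs it consumes."""
    producer = {info["makes"]: name for name, info in steps.items()}
    return {
        name: {producer[item] for item in info["uses"] if item in producer}
        for name, info in steps.items()
    }


def plan_sequence(steps):
    """Return an order in which every step follows the steps it depends on."""
    return list(TopologicalSorter(build_task_graph(steps)).static_order())
```

Raw parts such as `"caster"` have no producing step, so they add no edges. Independent branches (here, the base and the backrest) may appear in either order, which leaves room for a planner to interleave them; a cyclic graph would raise `graphlib.CycleError`.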

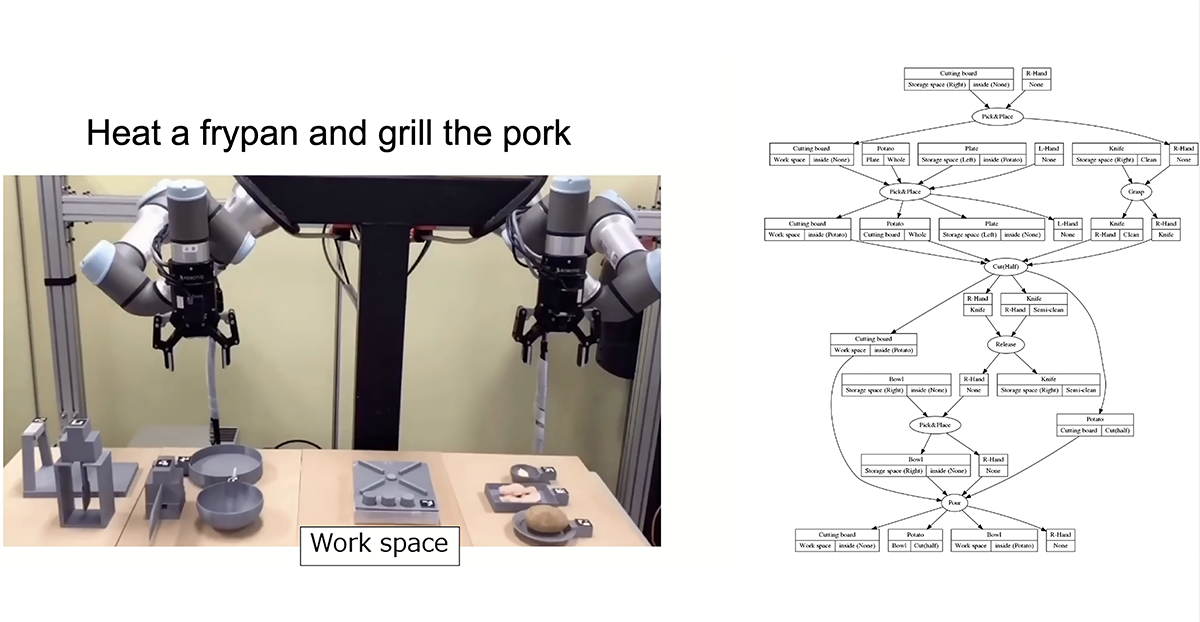

We have also researched planning cooking actions from cooking recipes instead of assembly manuals, and executing cooking actions based on these plans (5). Unlike an assembly manual, a cooking recipe consists mainly of verbal instructions. Another difference is that cooking involves more preparatory actions, such as taking cooking utensils from the shelf, than assembly does. Here, a graph structure similar to the Assembly Task Sequence Graph described earlier is constructed by analysing the recipe, and the work is planned from this structure.

Figure 4 shows the result of planning the robot’s tasks from the instruction “heat a frying pan with oil over high heat and fry pork”. In this case, the robot needs to perform preparatory tasks such as picking up the frying pan and placing the pork in the pan. The robot fills in these preparatory tasks automatically by first constructing a graph element from the instruction and then determining what is missing from it. Finally, the robot can perform the tasks described in the recipe.
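The idea of “determining what is missing” can be illustrated with simple backward chaining over action preconditions. In the sketch below, the action library, condition names and the assumption that each condition has exactly one achieving action are all hypothetical; effects only add conditions (nothing is ever deleted), which keeps the example short but is a simplification compared with a real cooking planner.

```python
# Hypothetical action library: preconditions each action needs, effects it adds.
ACTIONS = {
    "take_pan_from_shelf": {"pre": set(), "eff": {"pan_in_hand"}},
    "place_pan_on_stove": {"pre": {"pan_in_hand"}, "eff": {"pan_on_stove"}},
    "pour_oil": {"pre": {"pan_on_stove"}, "eff": {"oil_in_pan"}},
    "heat_high": {"pre": {"pan_on_stove", "oil_in_pan"}, "eff": {"pan_hot"}},
    "take_pork_from_fridge": {"pre": set(), "eff": {"pork_in_hand"}},
    "place_pork_in_pan": {"pre": {"pork_in_hand", "pan_hot"}, "eff": {"pork_in_pan"}},
    "fry": {"pre": {"pork_in_pan", "pan_hot"}, "eff": {"pork_fried"}},
}

# Which action achieves each condition (assumed unique in this example).
ACHIEVER = {cond: name for name, a in ACTIONS.items() for cond in a["eff"]}


def complete_plan(instructed, state):
    """Insert missing preparatory actions before each instructed action."""
    plan, state = [], set(state)

    def achieve(action):
        for cond in sorted(ACTIONS[action]["pre"]):
            if cond not in state:  # a missing precondition: add the step that provides it
                achieve(ACHIEVER[cond])
        plan.append(action)
        state.update(ACTIONS[action]["eff"])

    for action in instructed:
        achieve(action)
    return plan
```

If the instruction is parsed into `["pour_oil", "heat_high", "fry"]` and the kitchen starts empty, the sketch returns `["take_pan_from_shelf", "place_pan_on_stove", "pour_oil", "heat_high", "take_pork_from_fridge", "place_pork_in_pan", "fry"]`: the pan and pork handling steps are the inserted preparatory tasks.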

Summary

In this article, as examples of industrial robots using AI technology, we have focused on learning-based grasping tasks, recognition of human work actions, and work action planning. Future challenges include better handling of uncertainty in the environment and objects, and building systems that can flexibly adapt how they carry out procedures.

References

1. K. Fukuda, N. Yamanobe, I.G. Ramirez-Alpizar, and K. Harada, “Assembly Motion Recognition Framework Using Only Images,” Proceedings of IEEE/SICE International Symposium on System Integration, pp. 1242-1247, 2020.

2. AIST Press Release, 2019.

3. I. Sera, N. Yamanobe, I.G. Ramirez-Alpizar, Z. Wang, W. Wan, and K. Harada, “Assembly Planning by Recognizing a Graphical Instruction Manual,” Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 3115-3122, 2021.

4. Nitori official online store, work chair. https://www.nitorinet.jp/ec/cat/Chair/WorkChair/1/

5. K. Takada, N. Yamanobe, I.G. Ramirez-Alpizar, T. Kiyokawa, K. Koyama, W. Wan, and K. Harada, “Task planning for robots based on verbal instructions and food images,” Proceedings of the 22nd System Integration (SI2021), 2021.

*Please note: This is a commercial profile

© 2019. This work is licensed under CC-BY-NC-ND.