New technology is helping computer systems learn to accurately fill in the gaps in high-resolution facial images – and offer users multiple options for customisation

When we look at an incomplete object with some missing parts, our visual system can immediately fill in the gaps and perceive the object as being whole. In psychology, this is known as the law of closure.

Perceptually completing objects seems to be an intuitive task for human observers, but it can be very challenging for a computer system.

We investigate how to build completion models for facial images that work both efficiently and effectively at high resolutions.

Given samples drawn from an unknown data-generation process, the goal of completion models is to learn the underlying data distribution so that when some samples are corrupted, a trained model can recover the missing data and generate completed samples that are indistinguishable from real ones.

Completion models can be applied in various areas, such as dialogue analysis and audio reconstruction. Image completion, in particular, is an important application of completion models, not only because it has many practical uses but also because the high-dimensional data distribution of images makes it a challenging task.

With the rapid development of social media and smartphones, it has become increasingly popular for people to capture, edit and share photos and videos.

Sometimes, data is “missing” in pictures or video frames, and we need a system that can learn, from an initial set of example images, to generate the missing content and complete the images under user-chosen constraints.

For instance, faces can be occluded by dirty spots on a camera lens. Users may also want to remove unwanted parts from images, such as pimples or dark circles under the eyes.

Finally, before sharing images, many users prefer to replace parts of their faces (e.g. eyes or mouths) with more aesthetically pleasing ones so that the modified images look more attractive or have more natural expressions.

Image completion is a technique to replace target regions, either missing or unwanted, of images with synthetic content so that the completed images look natural, realistic and appealing.

Image completion can be divided into two categories: generic scene image completion and specific object image completion (e.g. human faces).

Because visual patterns are compositional and reusable, target regions in generic scene images have a high chance of resembling patterns found either in the surrounding context of the same image or in an external image dataset. Target regions in specific object images are harder to match, especially when large portions of essential parts of an object are missing (e.g. facial parts in Figure 1).

Completing such regions therefore requires a fine-grained understanding of the semantics, structure and appearance of images, which makes it the more challenging task.

Face images are among the most common images collected in people’s daily lives and shared on social networks. Much progress has been made in face completion since the recent resurgence of deep convolutional neural networks (CNNs), and especially with generative adversarial networks (GANs). Data distribution-based generative methods learn the underlying distribution governing the data generation with respect to the context. Our work addresses three important limitations of existing methods.

First, previous methods can only complete faces at low resolutions (e.g. 128×128).

Second, most approaches cannot control the attributes of the synthesised content. Previous works focused on generating realistic content. However, users may want to complete the missing parts with certain properties (e.g. facial expressions).

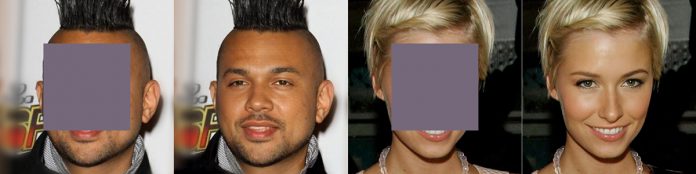

Figure 1: Face completion results of our method on CelebA-HQ. Our model directly generates completed images based on the input contextual information, instead of searching for similar exemplars in a database to fill in the “holes” as traditional methods do. Images in the leftmost column of each group are masked in grey, while the rest are synthesised faces.

Top: Our approach can complete face images at high resolution (1024×1024).

Bottom: The attributes of completed faces can be controlled by conditional vectors. Attributes (“Male”, “Smiling”) are used in this example. The conditional vectors of columns two to five are [0, 0], [1, 0], [0, 1] and [1, 1], in which ‘1’ denotes that the generated images have the particular attribute and ‘0’ denotes that they do not. Images are at 512×512 resolution. All images are best viewed enlarged.

Third, most existing approaches require post-processing or complex inference processes. Generally, these methods synthesise relatively low-quality images from which the corresponding contents are cut and blended with the original contexts. In order to complete one image, other approaches need to run thousands of optimisation iterations or feed an incomplete image to CNNs repeatedly at multiple scales.

To overcome these limitations, we introduce a novel approach that uses a progressive GAN to complete face images in high resolution with multiple controllable attributes (see Figure 1).

Our network is able to complete masked faces with high quality in a single forward pass without any post-processing. It consists of two sub-networks: a completion network and a discriminator.

Given face images with missing content, the completion network tries to synthesise completed images that are indistinguishable from uncorrupted real faces, while keeping their contexts unchanged.
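To make the input and the "contexts unchanged" requirement concrete, here is a minimal sketch that treats a greyscale image as a nested list of values in [0, 1]. The function names, the grey fill value of 0.5 and the mask convention (1 marks a missing pixel) are assumptions made for illustration, not details taken from our implementation.

```python
GREY = 0.5  # assumed fill value for masked pixels (illustrative only)


def apply_mask(image, mask, fill=GREY):
    """Return a copy of `image` with masked pixels (mask == 1) set to `fill`."""
    return [
        [fill if m else px for px, m in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


def context_unchanged(original, completed, mask, tol=1e-6):
    """Return True if every unmasked pixel of `completed` matches `original`."""
    return all(
        abs(o - c) <= tol
        for o_row, c_row, m_row in zip(original, completed, mask)
        for o, c, m in zip(o_row, c_row, m_row)
        if not m  # only context (unmasked) pixels are compared
    )
```

A completed image passes this check only if the network, or whatever produced the output, left every pixel outside the masked region as it was in the original.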

The discriminator is trained simultaneously with the completion network to distinguish completed “fake” faces from real ones. Unlike most existing works, which use encoder-decoder structures, we propose a new architecture based on the U-Net that better integrates information across all scales to generate higher-quality images.

Moreover, we designed new loss functions that induce the network to blend the synthesised content with the contexts in a realistic way.

Additionally, the training methodology of growing GANs progressively is adapted to generate high-resolution images. Starting from a low resolution (i.e. 4×4) network, layers that process higher-resolution images are incrementally added to the current generator and discriminator simultaneously.
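The growth schedule can be sketched in a few lines. This is an illustrative sketch only: it assumes that each added stage doubles the resolution, as is usual in progressive GAN training, and the function name `resolution_schedule` is hypothetical.

```python
def resolution_schedule(start=4, target=1024):
    """List the resolutions visited when growing from `start` to `target`.

    Assumes each new stage doubles the resolution of the previous one.
    """
    if start <= 0 or target < start:
        raise ValueError("need 0 < start <= target")
    schedule = [start]
    while schedule[-1] < target:
        schedule.append(schedule[-1] * 2)
    if schedule[-1] != target:
        raise ValueError("target must be start times a power of two")
    return schedule


if __name__ == "__main__":
    for stage, size in enumerate(resolution_schedule(), start=1):
        print(f"stage {stage}: {size}x{size}")
```

Under the doubling assumption, going from 4×4 to 1024×1024 takes nine stages: 4, 8, 16, 32, 64, 128, 256, 512 and 1024.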

A conditional version of our network is also proposed so that the appearance (e.g. “Male” or “Female”) and expression (e.g. smiling or not) of the synthesised faces can be controlled by multi-dimensional vectors (Figure 1).
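As a small illustration of such vectors, the sketch below enumerates every binary conditional vector for the two attributes named in Figure 1, in the same order as the figure's columns. The helper names `conditional_vectors` and `describe` are hypothetical, and how the vector is fed into the network is not shown.

```python
from itertools import product

ATTRIBUTES = ("Male", "Smiling")  # the two attributes named in Figure 1


def conditional_vectors(n_attributes):
    """Enumerate all binary vectors, with the first attribute varying fastest."""
    # product() varies the last position fastest, so each tuple is reversed.
    return [list(reversed(bits)) for bits in product((0, 1), repeat=n_attributes)]


def describe(vector, names=ATTRIBUTES):
    """Map a conditional vector to {attribute name: present or not}."""
    return {name: bool(flag) for name, flag in zip(names, vector)}


if __name__ == "__main__":
    for vec in conditional_vectors(len(ATTRIBUTES)):
        print(vec, describe(vec))
```

For two attributes this yields [0, 0], [1, 0], [0, 1] and [1, 1], so [1, 0] asks for a face that is "Male" but not "Smiling".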

In experiments, we compared our method with state-of-the-art approaches on the high-resolution face dataset CelebA-HQ. We showed that our system can complete faces with large structural and appearance variations using a single feed-forward pass of computation, with a mean inference time of 0.007 seconds for images at 1024×1024 resolution. The results of both a qualitative evaluation and a pilot user study showed that our approach completed face images significantly more naturally than existing methods, with improved efficiency.

References

[CNWH18] Zeyuan Chen, Shaoliang Nie, Tianfu Wu and Christopher G. Healey. High-resolution face completion with multiple controllable attributes via fully end-to-end progressive generative adversarial networks. arXiv preprint arXiv:1801.07632, 2018.

Please note: this is a commercial profile

Zeyuan Chen

PhD Candidate

Computer Science Department,

North Carolina State University

Tel: +1 607 379 8335

Christopher G. Healey

Professor, Department of Computer Science; Goodnight Distinguished Professor,

Institute for Advanced Analytics

North Carolina State University

Tel: +1 919 515 3190