Hailey Coverson, Data Scientist at Bayezian, discusses the complexities of genomic data, how to account for biases and inaccuracies, and AI’s potential to support clinical decisions in breast cancer care

Breast cancer is a complex disease. It is also the most prevalent cancer. The World Health Organization reports there were 2.3 million new cases in 2020 and, as of the end of the year, 7.8 million women had been diagnosed in the previous five years.

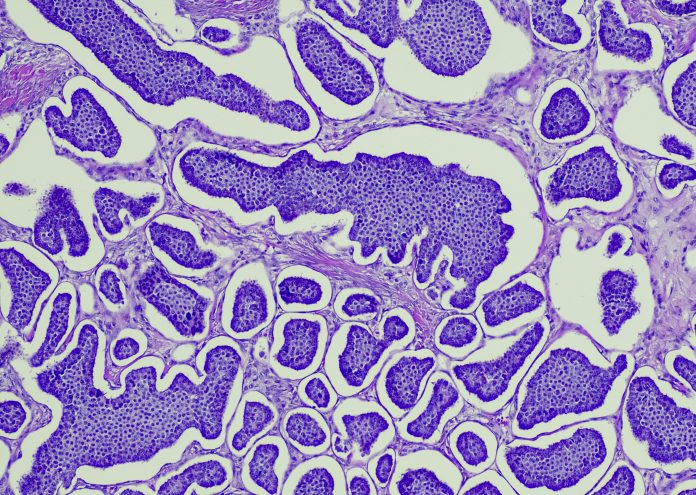

Its complex nature makes it very hard to treat with a blanket approach. This is because common therapies depend on the molecular subtype of the breast cancer tissue, with each subtype marked by a specific genetic profile. There are five main subtypes: Luminal A is the most common, accounting for ~70% of tumours, while the triple-negative subtype carries the worst prognosis because of its aggressive nature and the lack of targeted therapies.

But in August of last year, a major trial by Lund University showed that using AI for breast cancer screening could work as well as two radiologists, effectively halving the workload. Forty-one more cancers were detected, and no more false positives were produced compared with standard screening.

AI has extraordinary potential to support decision-making in clinical practice by generating insights from large datasets, a potential that has been enhanced by the global adoption of electronic health records (EHRs) across healthcare systems.

EHRs have been used to create a data warehouse on diagnoses, treatments, and patient outcomes, allowing researchers to train and test Machine Learning models on evolving real-world data. These advancements are allowing for the adoption of personalised healthcare, which best accounts for each individual’s needs and reduces patient mortality.

Now, pioneering trials are combining data engineering techniques with regression Machine Learning models to predict how long breast cancer patients are likely to survive. In doing so, there is potential to develop a tool that can help clinical practitioners decide on the course of treatment that gives each patient the best possible prognosis, given their personal medical history and genetic makeup.

But just as breast cancer is complex, so too is using AI in genomics. There can be a host of problems: biases within the models, missing data, and the assumptions models make about genomic data. So, how do you create models that meet the clinical standard required for use in patient care?

The complexities of genomic data

Like many fields in medicine and healthcare, the use of genomic data is rapidly advancing due to the integration of new technologies. Such is the pace of progress that data collated today can shed light on areas considered unanswerable even two or three years ago.

However, more questions need to be asked, as biological systems are massively complex, and making sense of them requires enormous datasets. Even now, scientists are still unearthing the vast amount of genetic variation in the human population. The ability to sequence DNA has far outpaced scientists’ ability to decipher the information it contains, so genomic data science is set to remain a fascinating field of research for many years to come.

That being said, its complexities must not be forgotten. Ethical responsibilities should remain at the forefront of research projects, noting that each person’s sequence data is associated with issues related to privacy and identity.

Accounting for biases and inaccuracies

Despite rapid progress, researchers must consider the chance of hidden biases and errors in a dataset. Disparities emerge when a single dataset contains several distinct distributions of measured values. Within genomic data specifically, this can arise from the differing expression profiles of different biological structures, and the number of possible variations is vast.

As a result, it is challenging to keep distributions consistent across training, validation, and test datasets and to produce accurate predictions. These distributions must be labelled with the associated explanatory variable so the model can effectively account for these nuances.
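One common way to keep distributions aligned across the three datasets is a stratified split on the labelled variable. The sketch below is illustrative only and is not the method used in any trial mentioned here: the `stratified_split` and `proportions` helpers, the 70/15/15 fractions, and the synthetic subtype labels are all assumptions made for the example.

```python
import random
from collections import Counter, defaultdict


def stratified_split(labels, fractions=(0.7, 0.15, 0.15), seed=0):
    """Split sample indices into train/validation/test, one stratum at a time.

    labels[i] is the stratum of sample i (for example, a molecular subtype).
    Returns three sorted lists of indices.
    """
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for idx, label in enumerate(labels):
        by_label[label].append(idx)

    train, val, test = [], [], []
    for label in sorted(by_label):
        idxs = by_label[label]
        rng.shuffle(idxs)  # randomise order within the stratum
        n_train = round(fractions[0] * len(idxs))
        n_val = round(fractions[1] * len(idxs))
        train += idxs[:n_train]
        val += idxs[n_train:n_train + n_val]
        test += idxs[n_train + n_val:]  # remainder goes to the test set
    return sorted(train), sorted(val), sorted(test)


def proportions(labels, indices):
    """Share of each stratum among the given sample indices."""
    counts = Counter(labels[i] for i in indices)
    return {label: counts[label] / len(indices) for label in sorted(counts)}


# Synthetic labels for illustration only; the group sizes are made up.
labels = ["luminal_a"] * 700 + ["triple_negative"] * 200 + ["other"] * 100
train, val, test = stratified_split(labels)

for name, part in (("train", train), ("validation", val), ("test", test)):
    print(name, len(part), proportions(labels, part))
```

Because each stratum is divided separately, every split carries roughly the same mix of subtypes as the full dataset. A purely random split offers no such guarantee, and small groups can end up missing from the validation or test set altogether.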

Missing data can similarly contribute to distributional issues when data points lying between distributions are omitted. Data may also be missing within a distribution, leaving some groups underrepresented. Both scenarios diminish the statistical power of a model and amplify its risk of bias.

The intricacies of managing missing data are undoubtedly challenging, and a universal approach to dealing with it does not exist. It is best to use complete datasets, although even the most extensive datasets are bound to have a degree of missingness. Overall, it’s a tricky balancing act which scientists are still trying to get right.
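Before choosing how to handle missing values, it can help to measure where they fall. The sketch below is a minimal illustration under stated assumptions: the record layout, the `gene_x` and `gene_y` feature names, the subtype codes, and both helper functions are hypothetical, and missing values are assumed to be stored as `None`.

```python
from collections import defaultdict


def missing_rate_by_feature(records, features):
    """Fraction of records in which each feature is missing (None)."""
    n = len(records)
    return {f: sum(r.get(f) is None for r in records) / n for f in features}


def missing_rate_by_group(records, feature, group_key):
    """Missing rate of a single feature within each group."""
    totals = defaultdict(int)
    missing = defaultdict(int)
    for r in records:
        group = r[group_key]
        totals[group] += 1
        if r.get(feature) is None:
            missing[group] += 1
    return {group: missing[group] / totals[group] for group in totals}


# Hypothetical toy records; the values carry no clinical meaning.
records = [
    {"subtype": "A", "gene_x": 1.2, "gene_y": 0.4},
    {"subtype": "A", "gene_x": 0.9, "gene_y": None},
    {"subtype": "A", "gene_x": 1.1, "gene_y": 0.5},
    {"subtype": "A", "gene_x": 1.0, "gene_y": 0.6},
    {"subtype": "B", "gene_x": None, "gene_y": 2.1},
    {"subtype": "B", "gene_x": None, "gene_y": None},
]

by_feature = missing_rate_by_feature(records, ["gene_x", "gene_y"])
by_group = missing_rate_by_group(records, "gene_x", "subtype")
print(by_feature)
print(by_group)
```

In this toy data the overall missing rate for `gene_x` looks moderate, but the per-group view shows it is missing entirely for one subtype. Dropping incomplete records would remove that subtype from the analysis, which is the kind of underrepresentation described above.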

AI’s potential for breast cancer

AI is particularly valuable in the field of genomic data because it can sift and analyse large datasets far more quickly than humans can. This opens up a great number of possibilities, including the hope that breakthroughs will be made at a more frequent, consistent rate.

However, as referenced previously, the data being utilised must be correct, with accuracy and robustness paramount. Researchers must recognise the profound impact that decisions made regarding breast cancer treatment, as well as other areas of healthcare, can have on individuals’ lives.

Therefore, the tools employed to facilitate such decisions must exhibit unwavering reliability. The scope of what can be achieved by integrating AI throughout healthcare research and strategy is breathtaking. While it won’t fully replace the role of medical professionals, it has a part to play in supporting decision-making and analysing genomic data in ways we have never seen before.

To offer the best care, however, tackling biases and inaccuracies in datasets must remain a priority. Despite the pace of new technologies and their exciting potential, the focus must remain on patient care that protects both privacy and identity while delivering the best possible results.