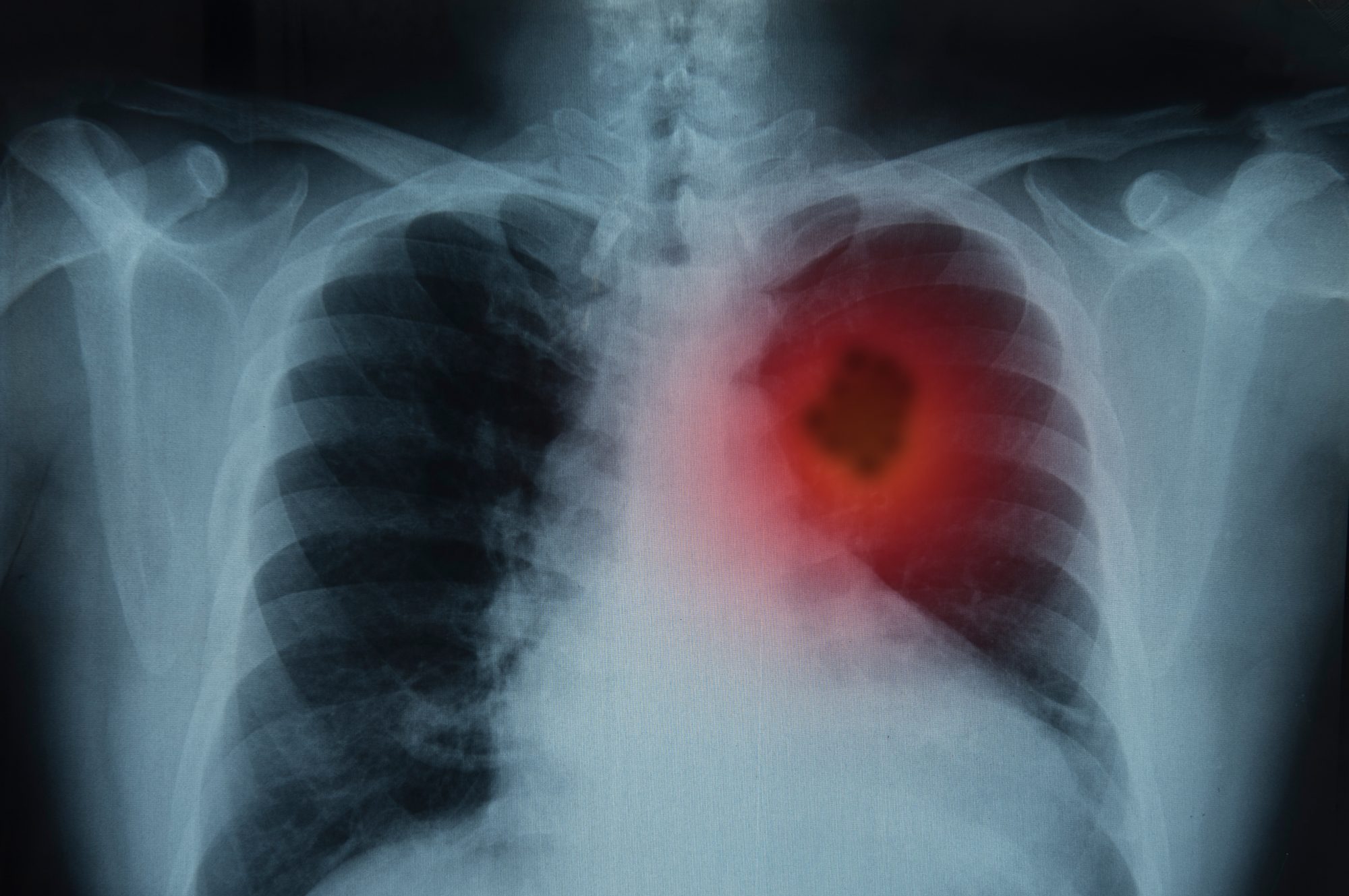

Researchers develop AI model for cancer diagnosis that is expected to revolutionise detection

Professor Park Sang-Hyun of the Department of Robotics and Mechatronics Engineering has led a research team in developing a weakly supervised deep learning model that can accurately locate cancer in pathological images using only data that indicates whether cancer is present.

What do existing AI models for cancer diagnosis look like?

Existing deep learning models require a dataset in which the cancer site has been annotated, whereas the model developed in this study does not, which improves efficiency. It is hoped that it will make a significant contribution to the field.

Locating the cancer site usually involves zoning, that is, outlining the cancer regions by hand, which is time-consuming and costly, so the new AI model looks promising.

Weakly supervised learning models, which zone cancer sites using only coarse labels such as ‘whether cancer is present in the image or not’, are under active study.

However, when existing weakly supervised learning models are applied to large pathological image datasets, in which a single image can be several gigabytes, their performance deteriorates significantly.

How did researchers solve existing issues?

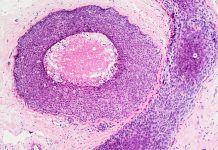

To address this, researchers have tried to improve performance by dividing pathological images into patches. However, once an image is split, the patches lose their location information and the correlation between them, which limits how much of the available information can be used.
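To make the location problem concrete, here is a minimal pure-Python sketch of splitting an image into patches while recording each patch's `(row, col)` grid position. The function name `tile_with_coords` and the toy list-of-lists image are illustrative assumptions, not the research team's code; real whole-slide images would be read with a dedicated library and tiled lazily.

```python
from typing import List, Tuple

Image = List[List[float]]  # toy grayscale image: rows of pixel intensities


def tile_with_coords(image: Image, patch: int) -> List[Tuple[int, int, Image]]:
    """Split an image into non-overlapping patch x patch tiles (hypothetical helper).

    Each tile is returned with its (row, col) grid position so the spatial
    layout can be restored later. Edge pixels that do not fill a whole tile
    are dropped in this simplified sketch.
    """
    height, width = len(image), len(image[0])
    tiles = []
    for r in range(height // patch):
        for c in range(width // patch):
            tile = [row[c * patch:(c + 1) * patch]
                    for row in image[r * patch:(r + 1) * patch]]
            tiles.append((r, c, tile))
    return tiles


image = [[i * 6 + j for j in range(6)] for i in range(4)]  # 4 x 6 toy image
for r, c, tile in tile_with_coords(image, 2):
    print(r, c, tile)
```

A pipeline that keeps only the tile contents and discards the `(row, col)` values loses exactly the location information the paragraph above refers to.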

Professor Park Sang-Hyun’s research team developed a technique that segments the cancer site using only training data labelled with whether cancer is present in each slide.

Their solution starts with a pathological image compression technique. The network is first trained through unsupervised contrastive learning to extract meaningful features from the patches; these features are then used to represent each patch while preserving its location, which reduces the size of the image while maintaining the correlation between the patches.
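The two steps of this compression technique can be sketched as follows, under clearly stated assumptions: the contrastive objective shown is a SimCLR-style NT-Xent loss (a common choice; the article does not say which loss the team used), and `compress_slide` with its `toy_encoder` is a hypothetical stand-in for the trained network. Everything operates on plain Python lists so the example runs without extra dependencies.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Patch = List[List[float]]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity, guarded against zero-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / max(norm, 1e-12)


def nt_xent(z1: Sequence[Vector], z2: Sequence[Vector], temperature: float = 0.5) -> float:
    """SimCLR-style contrastive loss (an assumption, not confirmed by the article).

    z1[i] and z2[i] embed two augmented views of the same patch. Each embedding
    is pushed to be most similar to its partner among the other 2N - 1 embeddings.
    """
    z = list(z1) + list(z2)
    n = len(z1)
    total = 0.0
    for i in range(2 * n):
        j = (i + n) % (2 * n)  # index of the positive partner
        denom = sum(math.exp(cosine(z[i], z[k]) / temperature)
                    for k in range(2 * n) if k != i)
        pos = math.exp(cosine(z[i], z[j]) / temperature)
        total += -math.log(pos / denom)
    return total / (2 * n)


def compress_slide(tiles: Sequence[Tuple[int, int, Patch]],
                   encoder: Callable[[Patch], Vector]) -> List[List[Vector]]:
    """Replace every patch with its feature vector, kept at the patch's (row, col).

    The result is a much smaller grid of features whose layout mirrors the
    slide, so neighbouring patches remain neighbours.
    """
    rows = max(r for r, _, _ in tiles) + 1
    cols = max(c for _, c, _ in tiles) + 1
    grid: List[List[Vector]] = [[[] for _ in range(cols)] for _ in range(rows)]
    for r, c, patch in tiles:
        grid[r][c] = encoder(patch)
    return grid


def toy_encoder(patch: Patch) -> Vector:
    """Hypothetical stand-in for the trained network: mean and max intensity."""
    pixels = [p for row in patch for p in row]
    return [sum(pixels) / len(pixels), max(pixels)]


# Matched views give a lower loss than mismatched ones.
print(nt_xent([[1, 0], [0, 1]], [[1, 0], [0, 1]]))  # about 0.24
print(nt_xent([[1, 0], [0, 1]], [[0, 1], [1, 0]]))  # about 2.24

tiles = [(0, 0, [[1, 3]]), (0, 1, [[2, 2]]), (1, 0, [[0, 4]]), (1, 1, [[5, 5]])]
print(compress_slide(tiles, toy_encoder))  # [[[2.0, 3], [2.0, 2]], [[2.0, 4], [5.0, 5]]]
```

Because `compress_slide` stores each feature vector at its original grid position, later stages can still tell which patches are adjacent, which is the property the compression step is meant to preserve.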

Next, the team built a model that uses a class activation map to locate the regions most likely to contain cancer in the compressed pathology images. Using a pixel correlation module (PCM), it can then zone all such regions across the entire pathology image.
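A class activation map followed by a pixel-correlation refinement can be sketched on a small compressed feature grid as below. This is an illustration under assumptions: the CAM is the standard projection of each location's features onto the cancer-class classifier weights, while `pixel_correlation_refine` follows only the general idea of a pixel correlation module (propagating scores between locations whose features are similar), not the paper's exact design. `class_weights`, the helper names and the 0.5 threshold are hypothetical.

```python
import math
from typing import List, Sequence

Vector = List[float]
Grid = List[List[Vector]]
Map = List[List[float]]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity, guarded against zero-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / max(norm, 1e-12)


def class_activation_map(features: Grid, class_weights: Vector) -> Map:
    """Score each location by the dot product of its features with the
    'cancer' classifier weights, clip negatives, and rescale to [0, 1]."""
    cam = [[max(0.0, sum(f * w for f, w in zip(vec, class_weights))) for vec in row]
           for row in features]
    peak = max(max(row) for row in cam) or 1.0  # avoid dividing by zero
    return [[v / peak for v in row] for row in cam]


def pixel_correlation_refine(features: Grid, cam: Map) -> Map:
    """Re-estimate each score as an affinity-weighted average of all scores,
    where affinity is the non-negative cosine similarity of feature vectors."""
    h, w = len(features), len(features[0])
    flat_feat = [vec for row in features for vec in row]
    flat_cam = [v for row in cam for v in row]
    refined = []
    for fi in flat_feat:
        aff = [max(0.0, cosine(fi, fj)) for fj in flat_feat]
        total = sum(aff) or 1.0  # L1-normalise the affinities
        refined.append(sum(a * s for a, s in zip(aff, flat_cam)) / total)
    return [refined[r * w:(r + 1) * w] for r in range(h)]


def to_mask(scores: Map, threshold: float = 0.5) -> List[List[int]]:
    """Binarise scores into a cancer / non-cancer zoning mask."""
    return [[1 if v >= threshold else 0 for v in row] for row in scores]


features = [[[1.0, 0.0], [1.0, 0.1], [0.0, 1.0]]]  # a 1 x 3 feature grid
cam = class_activation_map(features, class_weights=[1.0, 0.0])
refined = pixel_correlation_refine(features, cam)
print(cam)               # [[1.0, 1.0, 0.0]]
print(to_mask(refined))  # [[1, 1, 0]]
```

The all-pairs affinity loop is quadratic in the number of locations; it is written this way for readability, and a practical version would use batched tensor operations on the compressed grid.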

The newly developed deep learning model achieved a Dice similarity coefficient (DSC) of 81–84 in the cancer zoning task, despite being trained only on slide-level cancer labels.
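For readers unfamiliar with the metric, the Dice similarity coefficient compares a predicted cancer mask A with the annotated mask B as DSC = 2|A ∩ B| / (|A| + |B|), so identical masks score the maximum and non-overlapping masks score zero. The sketch below assumes flattened binary masks and reports the score on a 0–100 scale, which appears to be how the figures above are quoted; `dice_score` is a hypothetical helper name, and the handling of two empty masks is a convention chosen here.

```python
from typing import Sequence


def dice_score(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Dice similarity coefficient of two flattened binary masks, scaled to 0-100."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")
    overlap = sum(1 for p, t in zip(pred, truth) if p and t)
    size_sum = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    if size_sum == 0:
        return 100.0  # both masks empty: treated as perfect agreement (a convention)
    return 100.0 * 2 * overlap / size_sum


print(dice_score([1, 1, 0, 0], [1, 0, 1, 0]))  # 50.0
print(dice_score([1, 1, 0, 0], [1, 1, 0, 0]))  # 100.0
```

Because the score counts both predicted and annotated pixels in the denominator, it penalises missed cancer regions and false alarms alike.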

This significantly exceeded the performance of previously proposed patch-level methods and other weakly supervised learning techniques (DSC: 20–70).

The future of AI models for cancer diagnosis

Professor Park Sang-Hyun adds: “The model developed through this study has greatly improved the performance of weakly supervised learning on pathological images, and it is expected to help improve the efficiency of various studies that require pathological image analysis.

“If we can further improve the related technology in the future, it will be possible to apply it universally to various medical image zoning problems.”

This study has been published in Medical Image Analysis (MedIA).