John Yardley dives into a philosophical question at the heart of our AI obsession: Do we care about how Turing defined “Machine Intelligence”, and should we?

These days, we seem to be bombarded with news about the growth in artificial intelligence.

Much of this seems to be due to the concern that millions of humans will be put out of work by machines – something that has happened continuously since the invention of the plough. Some of it is undoubtedly due to the computer industry’s efforts to encourage organisations that are not yet using AI to buy its services.

But before we assume we have missed the AI boat, perhaps we should think about what AI really means, how it affects us and how it can both benefit and disadvantage us.

The definition of “Machine Intelligence”

When Alan Turing first coined the phrase “Machine Intelligence” he proposed that if a human could be convinced that a machine was another person, then that machine could be described as “thinking”. In order to test that, one would need to abstract the physical characteristics of the machine since, good as they are, robots are still not very convincing humans.

Turing proposed doing this by using an intermediary to mediate between the two parties. Today, this could be achieved simply by using a computer terminal. For example, if you had a Skype conversation with a machine and at the end of the conversation you were convinced you had been Skyping another human, then the machine could be said, by Turing at least, to be thinking. We could argue over whether thinking means intelligent, or machine means artificial, but Turing was describing the phenomenon we generally accept as artificial intelligence.

It doesn’t matter what is at the other end of the terminal. If it fools a human, it can be said to be intelligent. And this is the real crux of the matter. Something as simple as a thermostat would, at some point in history, have appeared to be intelligent because most people could not conceive of a situation where the temperature of a home could be controlled by anything other than a human regulating the amount of fuel put on a fire. It’s all relative.
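To see how little lies behind that apparent intelligence, here is a minimal sketch of a thermostat's entire decision process. The function name, the parameters and the half-degree band are illustrative assumptions, not a description of any real device.

```python
def heater_should_run(temperature_c, setpoint_c, heater_is_on, band_c=0.5):
    """Decide whether the heater should be on (hypothetical sketch).

    A small band around the setpoint stops the heater from
    switching on and off rapidly when the room is near the target.
    """
    if temperature_c < setpoint_c - band_c:
        return True   # too cold: turn the heat on
    if temperature_c > setpoint_c + band_c:
        return False  # too warm: turn the heat off
    return heater_is_on  # close enough: leave things as they are


print(heater_should_run(18.0, 20.0, heater_is_on=False))
print(heater_should_run(22.0, 20.0, heater_is_on=True))
```

Two comparisons are all it takes to do a job that once needed a person tending a fire.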

When did we forget about Turing’s definition?

Over the last 20 years, we seem to have lost sight of what Turing originally meant. We now tend to describe AI not by its behaviour, but by its implementation.

Let’s take the process of using a computer to convert text to speech as an example. Unlike English, the Italian language is very consistent. Notwithstanding the issue of making the computer sound like someone other than Stephen Hawking, the way a word is pronounced is completely specified by its spelling, so it is possible to write all the rules to do this. In English on the other hand, spelling doesn’t always give us a clue as to how a word should be pronounced. “Bow” and “bough” are two words a non-native speaker might have difficulty in distinguishing.

In writing a computer program to convert English text to speech, we cannot rely on rules to convert words into sounds – instead, we would have to look up the accepted pronunciations in a table. Some academics would call the rules intelligent and the table dumb. And some would argue the opposite. But to the listener, it really is academic. As long as the computer is doing what the listener expects of a human, it is acting intelligently. Indeed, even if a bank of humans is converting the text into speech, then provided it is done quickly, the listener won’t care.
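The two approaches can be sketched side by side. This is a toy illustration only: the letter-to-sound rules and the respellings in the table are made up for the example and are not real Italian or English phonology, and all the names are hypothetical.

```python
# Toy letter-to-sound rules (illustrative only, not real Italian phonology).
TOY_RULES = {"a": "ah", "c": "k", "i": "ee", "n": "n", "o": "oh", "s": "s", "t": "t"}

# Toy table of whole-word pronunciations for irregular spellings
# (the respellings are invented for this example).
TOY_TABLE = {"bough": "bau", "cough": "koff", "though": "thoh"}


def pronounce_by_rules(word):
    """Build a pronunciation from the spelling, one letter at a time."""
    return "-".join(TOY_RULES[letter] for letter in word.lower())


def pronounce_by_table(word):
    """Look the whole word up, because the spelling alone is not enough."""
    return TOY_TABLE[word.lower()]


def speak(word, pronouncer):
    """The listener's view: a word goes in, sounds come out."""
    return pronouncer(word)


print(speak("casa", pronounce_by_rules))
print(speak("bough", pronounce_by_table))
```

From the caller's side, `speak` behaves the same whichever function is plugged in, which is the listener's position: only the result is visible, not the method behind it.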

In the grand scheme of things, it may not matter how we define artificial intelligence because words and phrases change their meaning all the time – does it matter whether we call the computer intelligent or not?

It does matter, however, because some companies have vested interests in convincing us that we should care about the process rather than the outcome simply because they want us to buy their processes.

Can computers think like humans?

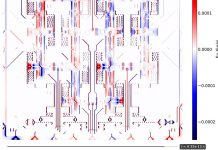

Neural networks are a good example. Loosely speaking, a neural network is a computer program that, in some measure, emulates a human brain and the way it learns. In many cases, the human is not aware of the rules he/she is using to solve certain types of problem and therefore cannot possibly deploy those rules in a computer program. But given enough examples, a neural network can work out the rules, although it can no more pass on those rules than we can read someone else’s mind. This can have big benefits in applications such as the early detection of cancer from historic cell images.

A human may not be able to predict that a cell will become cancerous, but if a neural network is presented with thousands of pre-cancerous cell images, it may work out a connection that a human cannot see. However, this strategy requires immense amounts of data and computer power, and will not necessarily help our understanding of the causes of cancer.

Indeed, in some cases, it may even slow down our understanding. Using a calculator saves us learning our times tables, but it doesn’t teach us anything about number theory, nor does it turn us into great mathematicians.
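The idea of working out rules from examples can be shown with a deliberately tiny sketch. It uses a single artificial neuron (a perceptron), which is far simpler than the networks used for image analysis, and the four labelled examples are an assumption chosen purely for illustration.

```python
# Labelled examples: two inputs and the answer we want (here, logical AND).
EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs is positive, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train(examples, epochs=20):
    """Perceptron learning: nudge the weights whenever a prediction is wrong."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            weights = [w + error * x for w, x in zip(weights, inputs)]
            bias += error
    return weights, bias


weights, bias = train(EXAMPLES)
for inputs, target in EXAMPLES:
    print(inputs, "->", predict(weights, bias, inputs), "(expected", target, ")")
```

Nobody told the program the rule. It ends up with a handful of numbers that give the right answers, but those numbers do not read like an explanation a person could pass on, and with millions of them rather than three, even less so.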

In the field of speech recognition, neural networks have led us up a bit of a blind alley. They have produced word recognition performance equalling, if not bettering, that of humans, yet neural networks are still not as good as humans at understanding speech, simply because the human uses so much more in understanding speech than the pure acoustic sound. Ironically, in the general field of pattern recognition, to which speech recognition belongs, speech recognition is no longer classed as “intelligent” by many in the field.

There is absolutely no doubt that there is a place for neural networks, but by restricting the definition of artificial intelligence to technologies of this type, we not only discourage researchers from understanding the fundamental science, we also come to rely on extreme computing resources when often there is a much simpler solution at hand.

I therefore believe that if a machine can fool a human into believing it is human, then it exhibits artificial intelligence – no matter how it does it. I am with Turing on that.