In light of scandals like Cambridge Analytica, Tor Richardson-Golinski, Amelia Harshfield and Advait Deshpande examine who, and what, decides what we read online

When the Cambridge Analytica scandal broke in early 2018, people were surprised to discover the extent to which their personal data, online behaviours and preferences had been used to target them for political advertising on Facebook.

It prompted outrage, and the UK’s Digital, Culture, Media and Sport Committee, which investigated the scandal at the time, expressed concern over the “relentless targeting of hyper-partisan views, which play to the fears and prejudices of people, in order to influence their voting plans.” Concern over social media profiling and targeting remains in the news. Recent examples include the claim that YouTube’s algorithms and filtering software may help to ‘radicalise’ viewers, and assertions that algorithms were as influential as policies in the 2019 UK general election campaigns.

But does the issue merely lie with how social media platforms use algorithms to deliver content online?

Algorithms are at the core of the decision-making process for social media platforms such as Facebook and YouTube, as they determine the content that people see and engage with online. This process is designed to maximise user engagement by prioritising the content users are likely to find most appealing and most relevant to their interests. The filtering draws on users’ interactions with the platforms as well as their account and behavioural data. The platforms collect this data continually and use it to help predict users’ behavioural patterns and decision-making processes.

Online platforms use this data to target advertisements, determine the content shown and influence users’ buying decisions. The challenge is not that users are unaware their data is being collected, but rather what that data can be used for, the sophistication of the algorithms, and the accuracy with which they can predict users’ responses and behaviour.

What about the prevalence of social media as a news platform?

As social media has increasingly become the main outlet through which people acquire news and opinion, there are also concerns about the impact that algorithm-driven media services may have on the spread of ‘fake news’ and misleading information. Social media platforms allow content to be published by anyone, anywhere.

The speed at which content can be published and shared on social media, and its broad reach, can aid the rapid spread of disinformation. Research shows that disinformation spreads at a rate six times higher than truthful content on Facebook and Twitter, and is often shared by users who have read only the headline, which is frequently misleading and attention-grabbing.

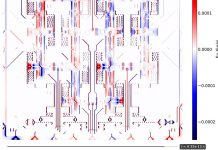

A recent study by RAND Europe and Open Evidence found that algorithmic bias is a product of both the underlying biases of programmers and the biases of users who respond to content in specific ways. The resulting self-reinforcing feedback loop is one of the main drivers of the dissemination of false and misleading information. While the underlying data and algorithmic decision-making may shape the content people see, whether that content spreads into the mainstream media depends on how people select and react to it.

The danger is that algorithm-based services can amplify human cognitive biases and their consequences. Examples include confirmation bias, when people interpret and remember information in a way that reflects their existing beliefs, and the bandwagon effect, when people base their behaviour on the behaviour of others.

How do social media platforms use content filtering?

Since most social media platforms rely on advertising revenue, providing their users with the content they respond to most is crucial to ensuring active use of, and engagement with, their services. To do so, the platforms use content filtering to show users content that matches their interests and likes. Such filtering mechanisms can create “echo chambers” and “filter bubbles” when people with similar perceived interests and ideologies group together. Users may become encapsulated in an online ‘bubble’ of a specific type of news corresponding to their interests, which may contribute to ideological segregation between users.

What will happen now?

In response to the threat of disinformation and its perceived ill-effects on democratic discourse, a number of EU member states have launched initiatives, proposals and studies that aim to understand and regulate online platforms. The European Commission states that “greater transparency is needed for users to understand how the information presented to them is filtered, shaped or personalised, especially when this information forms the basis of purchasing decisions or influences their participation in civic or democratic life.”

Algorithms used online are essential for enabling relevant connectivity and communication in an increasingly globalised, interconnected world. But online social media content is susceptible to human biases, interference, and challenges of data classification and interpretation on a scale that has not been encountered before.

Tried and tested approaches to regulating traditional media are neither designed nor equipped to address effectively the challenges posed by the spread of disinformation on social media. It could therefore be critical to develop a set of solutions that overcome the hurdles associated with social media platforms and their algorithm-driven services, and that promote transparency about how these services work.

Regulating content may not be down to the platforms alone; social media users and oversight authorities also have a role to play, by remaining vigilant about their choices and about how those choices influence the way online social platforms mediate content.

Research Assistant Tor Richardson-Golinski, Analyst Amelia Harshfield and Senior Analyst Advait Deshpande were part of a RAND Europe joint study looking at media literacy and empowerment issues for the European Commission.